This book is targeted at an audience who has already had an intro to linear algebra course, but may not have the sense that they’ve mastered the topic. The intended audience may not even remember linear algebra well. For those readers, we have some review sections in the appendix. (The section on bra-ket notation is not usually covered in an intro course, but is also indispensable for the material.)

You’d normally spend a lot of time in an intro to linear algebra class doing calculations, including reduced row echelon form; inverses; eigenvalues and eigenvectors; and Cramer’s rule. We skip these topics, even in the review section. This book covers a lot of applications of linear algebra, and we accept that many of the interested readers will be practitioners. So we don’t need to pretend that these things can’t be easily calculated with a computer program. I won’t go so far as to say that there’s no educational value in doing some of these calculations by hand, but given that you’ve successfully completed a linear algebra class, I give you permission to forget them.

This book attempts to put linear algebra concepts in a more intuitive context. As such it covers most topics that could be called linear algebra. But it doesn’t cover everything. Some very rich and interesting advanced topics that are not covered here include:

Abstract vector spaces, function spaces, infinite-dimensional spaces

Tensors and multi-linear algebra

Group representations

Finally, some good news for some of you. I’ve written this book to avoid talking about complex numbers. It’s surprising, but I’ve pulled it off. With the approach I’ve taken, considering complex numbers only adds a bunch of pesky qualifiers to any statement I make. So throughout the book, we’ll pretend that these don’t even exist.

Chapters labeled [WIP] are not available currently.

We start the book with the most remarkable fact of linear algebra: For any matrix \(A\), there exists a singular value decomposition (SVD). The SVD of a matrix \(A\) is three matrices, \(U\), \(D\), and \(V\), where

\(U\) and \(V\) are orthogonal,

\(D\) is diagonal, and

\(A=UDV^T\).

(See Section [Orthogonal Matrices] for a review on orthogonal matrices.)

If \(A\) is dimension \(m\times n\), then \(U\) is dimension \(m\times m\), \(V\) is dimension \(n\times n\), and \(D\) is dimension \(m\times n\). \(D\) is zero everywhere except for potentially \(D_{ii}\) for \(1\leq i\leq\min(m, n)\). By convention, we sometimes write \(\lambda_i:=D_{ii}\). As well, we’ll sometimes write \(r:=\min(m, n)\).

Example

Let’s whip up an example real quick to demonstrate. We start with an array chosen arbitrarily and show that there is an SVD.

\[\left( \begin{array}{ccc} 1 & 1 & 2 \\ 3 & 5 & 8 \end{array} \right) = UDV^T,\]

where

\[\begin{array}{rcl} U &=& \left( \begin{array}{cc} -0.2381 & -0.9712 \\ -0.9712 & 0.2381 \end{array} \right)\\ \\ D&=&\left( \begin{array}{ccc} 10.192 & 0 & 0 \\ 0 & 0.3399 & 0 \end{array} \right)\\ \\ V^T&=&\left( \begin{array}{ccc} -0.3092 & -0.4998 & -0.8090 \\ -0.7557 & 0.6456 & -0.1100 \\ -0.5774 & -0.5774 & 0.5774 \end{array} \right) \end{array}\]

This calculation was done with a computer; the numbers are rounded here. The reader should verify that:

The matrices \(U\), \(D\), and \(V^T\) multiply to the original matrix.

The two outside matrices are orthogonal. That is, the rows are orthonormal.
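One way to carry out this verification is numerically. The sketch below assumes NumPy is available and uses `numpy.linalg.svd`; the signs of the computed vectors may differ from those printed above, since an SVD is not unique.

```python
import numpy as np

A = np.array([[1.0, 1.0, 2.0],
              [3.0, 5.0, 8.0]])

# full_matrices=True returns U as 2x2 and Vt as 3x3; s holds the diagonal of D.
U, s, Vt = np.linalg.svd(A, full_matrices=True)

# Rebuild the 2x3 matrix D from the singular values.
D = np.zeros(A.shape)
D[:len(s), :len(s)] = np.diag(s)

print(np.round(s, 4))                      # diagonal entries of D
print(np.allclose(U @ D @ Vt, A))          # the product recovers A
print(np.allclose(U @ U.T, np.eye(2)))     # U is orthogonal
print(np.allclose(Vt @ Vt.T, np.eye(3)))   # V is orthogonal
```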

At this point, this is a neat party trick. It’s not at all clear, but such a decomposition can be found for any matrix.

The SVD is generally not unique for a given matrix. This fact causes difficulties. Because the SVD is not unique, we usually say that a decomposition is an SVD and not the SVD. We show later in the chapter that SVDs are almost unique. Usually, any SVD will do.

Example

To give an example of non-uniqueness, let’s look at the matrix:

\[\left(\begin{array}{ccc}1&0&0\\0&0&0\\0&0&0\end{array}\right)\]

This can be decomposed as:

\[\left(\begin{array}{ccc}1&0&0\\0&1&0\\0&0&1\end{array}\right) \left(\begin{array}{ccc}1&0&0\\0&0&0\\0&0&0\end{array}\right) \left(\begin{array}{ccc}1&0&0\\0&1&0\\0&0&1\end{array}\right)\]

Or as:

\[\left(\begin{array}{ccc}1&0&0\\0&\sqrt{1/2}&\sqrt{1/2}\\0&\sqrt{1/2}&-\sqrt{1/2}\end{array}\right) \left(\begin{array}{ccc}1&0&0\\0&0&0\\0&0&0\end{array}\right) \left(\begin{array}{ccc}1&0&0\\0&\sqrt{1/2}&\sqrt{1/2}\\0&\sqrt{1/2}&-\sqrt{1/2}\end{array}\right)\]

If you follow the multiplication through, you can see that for the outside matrices, everything except the first row and column gets ignored. We must choose orthonormal vectors for the other two rows and columns in order to satisfy the orthogonality condition of the SVD. But outside of that constraint, we’re free to do whatever we want with these other rows and columns.
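Both decompositions can be checked with a few lines of NumPy. This is only an illustrative sketch; the names `I`, `Q`, and `h` are introduced here and are not part of the text.

```python
import numpy as np

A = np.diag([1.0, 0.0, 0.0])
D = np.diag([1.0, 0.0, 0.0])

# First decomposition: identity matrices on the outside.
I = np.eye(3)

# Second decomposition: a different orthogonal matrix on the outside.
h = np.sqrt(0.5)
Q = np.array([[1.0, 0.0, 0.0],
              [0.0, h,   h],
              [0.0, h,  -h]])

print(np.allclose(I @ D @ I, A))        # first decomposition gives A
print(np.allclose(Q @ D @ Q, A))        # so does the second
print(np.allclose(Q @ Q.T, np.eye(3)))  # and Q is orthogonal
```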

Low-rank matrices are one way that uniqueness can fail, but not the only way. See Section 2.3 for a more complete discussion.

Soon we’ll show that the SVD exists. Said another way: We’ll show there exist bases in the domain and range for which \(A\) is diagonal. (See Section 9.3 for a review of this concept.) On its face, this is a simple-yet-powerful theorem that doesn’t have the usual qualifiers seen in linear algebra. It doesn’t require complex numbers, and it’s one of the few theorems that apply cleanly to rectangular matrices. But beyond these surface advantages, we’ll see that the SVD is widely applicable. In fact, it’s so applicable that we make this a cornerstone of the book. The SVD is critical to understanding linear algebra, because it expresses something fundamental about linear transformations.

After a brief detour to discuss bra-ket form, we’ll spend the rest of this section exploring that fundamentality. We will view the SVD through three major lenses: first, by viewing matrices as a collection of eigenvalues and eigenvectors; then, by viewing matrices as the product of vectors and covectors; finally, by viewing matrices as bilinear forms.

This section uses Bra-ket Notation heavily. If you’re unfamiliar with this form, please read Section [Bra-ket Notation] before proceeding.

An often more-convenient way to write the SVD is:

\[A=\sum_{i=1}^r\lambda_i| u _i\rangle\langle v _i|\]

Here \(| u _i\rangle\) are the columns of \(U\) and \(\langle v _i|\) are the rows of \(V^T\).

Theorem 2.1. Any matrix \(A\) can be written as: \[A=\sum_{i=1}^r\lambda_i| u _i\rangle\langle v _i|\]

Proof. Say \(A\) is \(m\times n\) dimensional with \(r=\min(m, n)\) as usual. We write the matrices as though \(m<n\), but other cases work out the same way. Write the SVD of \(A\) as:

\[A = UDV^T = \left[ \begin{array}{cccc} \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex}\\ |u_1\rangle & |u_2\rangle & \cdots & |u_m\rangle \\ \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex} \end{array} \right] \left[ \begin{array}{cccccc} \lambda_1 & 0 & \cdots & 0 & \cdots & 0 \\ 0 & \lambda_2 & \cdots & 0 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots & \cdots & \vdots \\ 0 & 0 & \cdots & \lambda_r & \cdots & 0 \\ \end{array} \right] \left[ \begin{array}{ccc} \rule[.5ex]{2.5ex}{0.5pt}& \langle v_1| & \rule[.5ex]{2.5ex}{0.5pt}\\ \rule[.5ex]{2.5ex}{0.5pt}& \langle v_2|& \rule[.5ex]{2.5ex}{0.5pt}\\ & \vdots & \\ \rule[.5ex]{2.5ex}{0.5pt}& \langle v_n|& \rule[.5ex]{2.5ex}{0.5pt} \end{array} \right]\]

We can write the diagonal matrix as:

\[D = \sum_{i=1}^r \left[\begin{array}{ccccc} 0 & \cdots & 0 & \cdots & 0 \\ \vdots & \ddots & \vdots & \ddots & \vdots \\ 0 & \cdots & \lambda_i & \cdots & 0 \\ \vdots & \ddots & \vdots & \ddots & \vdots \\ 0 & \cdots & 0 & \cdots & 0 \\ \end{array}\right]\]

Each summand is the diagonal matrix with all but the \(i\)-th entry zeroed out. We distribute the \(U\) on the left and \(V^T\) on the right, to end up with:

\[\begin{array}{rcl} A &=& \sum_{i=1}^r\left[ \begin{array}{cccc} \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex}\\ | u _1\rangle & | u _2\rangle & \cdots & | u _m\rangle \\ \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex} \end{array} \right] \left[\begin{array}{ccccc} 0 & \cdots & 0 & \cdots & 0 \\ \vdots & \ddots & \vdots & \ddots & \vdots \\ 0 & \cdots & \lambda_i & \cdots & 0 \\ \vdots & \ddots & \vdots & \ddots & \vdots \\ 0 & \cdots & 0 & \cdots & 0 \\ \end{array}\right]\left[ \begin{array}{ccc} \rule[.5ex]{2.5ex}{0.5pt}& \langle v _1| & \rule[.5ex]{2.5ex}{0.5pt}\\ \rule[.5ex]{2.5ex}{0.5pt}& \langle v _2|& \rule[.5ex]{2.5ex}{0.5pt}\\ & \vdots & \\ \rule[.5ex]{2.5ex}{0.5pt}& \langle v _n|& \rule[.5ex]{2.5ex}{0.5pt} \end{array} \right] \\ &=& \sum_{i=1}^r\left[ \begin{array}{cccc} \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex}\\ | u _1\rangle & | u _2\rangle & \cdots & | u _m\rangle \\ \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex} \end{array} \right] \left[ \begin{array}{ccc} \rule[.5ex]{2.5ex}{0.5pt}& 0 & \rule[.5ex]{2.5ex}{0.5pt}\\ & \vdots & \\ \rule[.5ex]{2.5ex}{0.5pt}& \lambda_i\langle v _i|& \rule[.5ex]{2.5ex}{0.5pt}\\ & \vdots & \\ \rule[.5ex]{2.5ex}{0.5pt}& 0& \rule[.5ex]{2.5ex}{0.5pt} \end{array} \right] \\ &=& \sum_{i=1}^r\left[ \begin{array}{ccc} \lambda_i| u _i\rangle_1\langle v _i|_1 & \lambda_i| u _i\rangle_1\langle v _i|_2 & \cdots \\ \lambda_i| u _i\rangle_2\langle v _i|_1 & \lambda_i| u _i\rangle_2\langle v _i|_2 & \cdots \\ \vdots & \vdots & \ddots \end{array} \right] \end{array}\]

When you compare this final form to the definition of the outer product, you see that it equals \(\sum_{i=1}^r\lambda_i| u _i\rangle\langle v _i|\). ◻
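The theorem can be sanity-checked numerically on the earlier \(2\times3\) example. The following is a minimal sketch assuming NumPy; `A_sum` is a name introduced here for the sum of outer products.

```python
import numpy as np

A = np.array([[1.0, 1.0, 2.0],
              [3.0, 5.0, 8.0]])
U, s, Vt = np.linalg.svd(A)

r = min(A.shape)
# Sum of r outer products: column i of U times row i of V^T, scaled by lambda_i.
A_sum = sum(s[i] * np.outer(U[:, i], Vt[i, :]) for i in range(r))

print(np.allclose(A_sum, A))
```

Note that only the first \(r\) rows of \(V^T\) enter the sum.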

The reader should pause to appreciate that although the matrix is \(m\times n\), only \(r=\min(m, n)\) summands are needed. What happened to the remaining \(n-m\) rows of \(V^T\)?

Because of the orthogonality of \(U\) and \(V\), the vectors \(\left\{| v _i\rangle\right\}\) form a basis of a subspace of the domain, and the vectors \(\left\{| u_i \rangle\right\}\) form a basis of a subspace of the codomain. (See Exercises [Ex-SVD Null Space] and [Ex-SVD Range] for a more precise statement.) The orthogonality of these vectors is very important; we use that fact constantly throughout the book.

To see why this form is convenient, we’ll show a quick proof that will be used later.

Lemma 2.2. Given an SVD, \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), then

\[A^TA=\sum_i\lambda_i^2|v_i\rangle\langle v_i|\]

Proof. Transposition distributes over sums. As well \((AB)^T=B^TA^T\), so we have that each summand \(\left(|u_i\rangle\langle v_i|\right)^T=|v_i\rangle\langle u_i|\). So we can start the \(A^TA\) equation as:

\[\begin{array}{rcl} A^TA&=& \left(\sum_i\lambda_i|v_i\rangle\langle u_i|\right) \left(\sum_j\lambda_j|u_j\rangle\langle v_j|\right) \\ &=&\sum_i\sum_j\lambda_i\lambda_j|v_i\rangle\langle u_i|u_j\rangle\langle v_j| \end{array}\]

Now we look closer at the middle term \(\langle u_i|u_j\rangle\). Because the vectors \(|u_j\rangle\) are orthonormal, this product equals \(0\) when \(i\neq j\) and \(1\) when \(i=j\). So the double index which loops over all possible values of \(i\) and \(j\) ends up being non-zero for only those terms where \(i=j\).

\[A^TA = \sum_i\lambda_i\lambda_i|v_i\rangle\langle u_i|u_i\rangle\langle v_i| = \sum_i\lambda_i^2|v_i\rangle\langle v_i|\] ◻
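As a quick numerical check of the lemma, the sketch below (assuming NumPy) compares \(A^TA\) with the sum on the right-hand side for the \(2\times3\) example used earlier; `rhs` is a name introduced here.

```python
import numpy as np

A = np.array([[1.0, 1.0, 2.0],
              [3.0, 5.0, 8.0]])
U, s, Vt = np.linalg.svd(A)

# Right-hand side of the lemma: sum of lambda_i^2 |v_i><v_i|.
rhs = sum(s[i] ** 2 * np.outer(Vt[i], Vt[i]) for i in range(len(s)))

print(np.allclose(A.T @ A, rhs))
```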

We will call the decomposition \(\sum_i\lambda_i|u_i\rangle\langle v_i|\) the bra-ket form (or summation form) of the SVD; the \(UDV^T\) form is called the matrix form of the SVD. The steps from above can be done in reverse to go from bra-ket form to matrix form.

Example

Given an SVD in bra-ket form \(A=5|u_1\rangle\langle v_1|+2|u_2\rangle\langle v_2|\), where

\[\begin{array}{rcl} \vec u_1 &=& (1, 0) \\ \vec v_1 &=& (\sqrt{1/2}, -\sqrt{1/2}) \\ \vec u_2 &=& (0, -1) \\ \vec v_2 &=& (\sqrt{1/2}, \sqrt{1/2}) \end{array}\]

Then \(A=UDV^T\) where

\[\begin{array}{rcl} U &=& \left( \begin{array}{cc} 1 & 0 \\ 0 & -1 \end{array} \right)\\ \\ D&=&\left( \begin{array}{cc} 5 & 0 \\ 0 & 2 \end{array} \right)\\ \\ V^T&=&\left( \begin{array}{cc} \sqrt{1/2} & -\sqrt{1/2} \\ \sqrt{1/2} & \sqrt{1/2} \end{array} \right) \end{array}\]

Notice that we can just slot the vectors and scalars into their corresponding spots. We end up with the matrix form of the SVD. In Exercise [Ex-Confirm SVD], you’ll be asked to confirm these calculations.

There is a caveat when the matrix does not have full rank.

Example

Let’s continue the above example.

\[A=\left(\begin{array}{ccc}1&0&0\\0&0&0\\0&0&0\end{array}\right)\]

We can write this using the bra-ket form as:

\[A=\left(\begin{array}{c}1\\0\\0\end{array}\right)\left(\begin{array}{ccc}1&0&0\end{array}\right) = |e_1\rangle\langle e_1|\]

The right-most expression uses the convention that \(\vec e_i\) is a vector with a \(1\) in the \(i\)-th position and \(0\) elsewhere.

In most cases, the bra-ket form of a \(3\times3\) matrix will have three summands, but that didn’t happen for this example. We can think of this as having two extra terms with coefficient \(\lambda_2=\lambda_3=0\). In that case, you can get creative with what you choose \(|u_2\rangle\langle v_2|\) and \(|u_3\rangle\langle v_3|\) to be. As long as the vectors are orthonormal, this is a valid SVD. Filling in random vectors like this is necessary to convert back to the matrix form, as we did above. (See Exercise [Ex-Rectangular bra-ket to matrix].)

A basic fact about linear maps is that they’re often invariant along certain axes. That is, \(A\vec v=\lambda\vec v\) for some \(\vec v\) and \(\lambda\). This is a somewhat remarkable fact. For example, a map, \(A\), sends \((1, 0)\) to \((5, 1)\) and \((0, 1)\) to \((1, 5)\). Imagine picking up the x-y plane at the points \((1, 0)\) and \((0, 1)\) and dragging them to their respective targets. As you drag these points, you stretch some parts of the plane and squeeze other parts. Yet the squeezing cancels out along the line \(y=x\), and this axis only gets stretched out radially. The second and fourth quadrants get stretched apart, causing a similar cancelation effect along the line \(y=-x\). This example is easy to visualize. Yet even when you do fairly complicated linear transformations with many dimensions, usually you’ll get some axes somewhere where all the pulling and pushing cancel out.
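The two invariant axes in this example can be confirmed directly. In the sketch below (assuming NumPy), the matrix is built from the images of \((1, 0)\) and \((0, 1)\); the variable names are introduced here for illustration.

```python
import numpy as np

# Columns are the images of (1, 0) and (0, 1).
A = np.array([[5.0, 1.0],
              [1.0, 5.0]])

along_y_eq_x = np.array([1.0, 1.0])
along_y_eq_minus_x = np.array([1.0, -1.0])

# Each product is a scalar multiple of the input vector.
print(A @ along_y_eq_x)         # 6 times (1, 1)
print(A @ along_y_eq_minus_x)   # 4 times (1, -1)
```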

The fact that eigenvalues usually exist isn’t obvious, and it’s a fact that people can’t shut up about. If you remember one thing from linear algebra, it’s probably eigenvalues. Eigenvalues and eigenvectors are important. But talking about eigenvalues is difficult and requires a lot of caveats. Eigenvalues only exist for square matrices and they are sometimes complex. Defective matrices don’t have a "full set" of eigenvalues. (Defective matrices are covered much later in the book.) The SVD doesn’t have any of these qualifiers. We’ll see throughout this book that for many applications of eigenvalues, the SVD will work instead. Look to Section 2.5 for a couple of immediate examples.

It’s not clear yet why the SVD is analogous to eigenvectors. To demonstrate: Say a matrix \(A\) has a set of eigenvectors, \(\left\{|v_i\rangle\right\}\), that span the domain, with corresponding eigenvalues \(\left\{\lambda_i\right\}\). Define a linear map \(P\) by the actions \(P|e_i\rangle=|v_i\rangle\), and define \(D\) as a diagonal matrix with \(\lambda_i\) as the \(i\)-th entry on the diagonal. Then \(A=PDP^{-1}\). This must be true because it’s true for every eigenvector, and every vector is a linear combination of eigenvectors (because they span the domain):

\[PDP^{-1}|v_i\rangle = PD|e_i\rangle = \lambda_iP|e_i\rangle = \lambda_i|v_i\rangle = A|v_i\rangle\]

This equality makes sense. \(P^{-1}\) maps the eigenvectors of \(A\) to the standard basis; \(D\) stretches invariantly along each standard basis vector; and \(P\) maps the standard basis back. The net effect is that the invariant stretching from \(D\) gets moved to the eigenvectors. An SVD, \(UDV^{-1}\), on the other hand, rotates, stretches along the standard basis, then rotates again to a (potentially) new orientation. (In the last sentence, we wrote \(V^T=V^{-1}\), which is true for all orthogonal matrices; we also called the orthogonal matrices "rotations", which is a good way to think of orthogonal matrices.) We can see that \(PDP^{-1}\) and \(UDV^{-1}\) are just two decompositions of matrices. The former, called a diagonalization, requires the outside matrices to be inverses of each other, while the SVD requires the outside matrices to be orthogonal. Diagonalizations exist sometimes, whereas SVDs always exist. We’ll see in Section 2.4 that these two concepts are intimately connected. See Chapter [Symmetric Matrices] for a discussion of how the SVD is also a diagonalization.
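To see the two decompositions side by side, here is a small sketch assuming NumPy. The matrix is an arbitrary non-symmetric example chosen for illustration (it is not from the text), picked so that the eigenvector matrix \(P\) is visibly not orthogonal.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [0.0, 3.0]])

# Diagonalization: columns of P are eigenvectors, w holds the eigenvalues.
w, P = np.linalg.eig(A)
print(np.allclose(P @ np.diag(w) @ np.linalg.inv(P), A))

# SVD: the outside matrices are orthogonal instead of mutually inverse.
U, s, Vt = np.linalg.svd(A)
print(np.allclose(U @ np.diag(s) @ Vt, A))

print(np.allclose(P.T @ P, np.eye(2)))   # False: P is not orthogonal here
print(np.allclose(U.T @ U, np.eye(2)))   # True: U is orthogonal
```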

One view of matrices is that they’re the product of vectors and covectors. The columns of \(A\) tell us how to act on standard basis vectors. Call the \(i\)-th column of \(A\) \(|A_{*i}\rangle\). Then,

\[A = \left[ \begin{array}{cccc} \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex}\\ |A_{*1}\rangle & |A_{*2}\rangle & \cdots & |A_{*n}\rangle \\ \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex} \end{array} \right] = \left[ \begin{array}{cccc} \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex}\\ A|e_1\rangle & A|e_2\rangle & \cdots & A|e_n\rangle \\ \rule[-1ex]{0.5pt}{2.5ex}& \rule[-1ex]{0.5pt}{2.5ex}& & \rule[-1ex]{0.5pt}{2.5ex} \end{array} \right]\]

Any vector \(\vec v=\begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}\) can be written as \(|v\rangle = \sum_i v_i|e_i\rangle\). So to figure out the effect of \(A\) on \(|v\rangle\), we only need to find the \(i\)-th component of \(|v\rangle\), multiply it by \(|A_{*i}\rangle\), and sum over \(i\). That is,

\[\begin{array}{rcl} A|v\rangle &=& A\sum_iv_i|e_i\rangle \\ &=& \sum_iv_iA|e_i\rangle \\ &=& \sum_i|A_{*i}\rangle v_i \end{array}\]

In the last line, we’ve written the coefficient after the vector to hint at the next step. Next, we observe that \(\langle e_i|v\rangle=\sum_jv_j\langle e_i|e_j\rangle = v_i\), where the last equality follows from the orthonormality of the standard basis. Now we can write the above equality as

\[\begin{array}{rcl} A|v\rangle &=& \sum_i|A_{*i}\rangle\langle e_i|v\rangle \end{array}\]

Comparing both sides, we can now say that \(A=\sum_i|A_{*i}\rangle\langle e_i|\). This rewriting recognizes that \(A\) is a sum of vector/covector products. The covectors \(\left\{\langle e_i|\right\}\) project onto the standard axes to get a scalar. Then the vectors \(\left\{|A_{*i}\rangle\right\}\) give direction to each of these scalars. This is an important way to understand matrices.
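The identity \(A=\sum_i|A_{*i}\rangle\langle e_i|\) is easy to check numerically. The sketch below assumes NumPy; the matrix and the test vector `v` are arbitrary choices made for illustration.

```python
import numpy as np

A = np.array([[1.0, 1.0, 2.0],
              [3.0, 5.0, 8.0]])
n = A.shape[1]
E = np.eye(n)   # row i is the standard basis vector e_i

# Sum over columns of the outer products |A_{*i}><e_i|.
A_sum = sum(np.outer(A[:, i], E[i]) for i in range(n))
print(np.allclose(A_sum, A))

# Acting on a vector: A|v> = sum_i |A_{*i}> v_i.
v = np.array([2.0, -1.0, 3.0])
Av = sum(A[:, i] * v[i] for i in range(n))
print(np.allclose(A @ v, Av))
```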

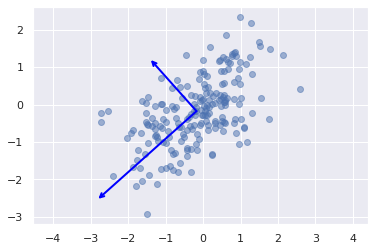

But by studying linear algebra, you learn that there’s nothing special about the standard basis \(\left\{|e_i\rangle\right\}\). For any orthonormal basis \(\left\{| v _i\rangle\right\}\), we can write the matrix \(A\) with this basis on the domain. (See Section [Change of Bases] for more detailed discussion on bases.) Call the columns of this new matrix \(\left\{| u _i\rangle\right\}\), and it follows that \(A=\sum_i| u _i\rangle\langle v _i|\). Although \(\left\{\langle v _i|\right\}\) are orthonormal, that’s not usually true of the resulting \(\left\{| u _i\rangle\right\}\). The existence of an SVD is the existence of a domain basis \(\left\{\langle v _i|\right\}\) for which the corresponding \(\left\{| u _i\rangle\right\}\) are also orthogonal, though not necessarily of unit length. We can write \(|u_i\rangle=\lambda_i|u_i'\rangle\), so that \(\left\{|u_i'\rangle\right\}\) are orthonormal. The basis \(\left\{\langle v _i|\right\}\) that produces orthogonal columns on \(A\) is a very special basis. There’s no reason to prefer to represent \(A\) in the standard basis or any other basis. So we might as well choose \(\left\{\langle v _i|\right\}\), giving \(A=\sum_i\lambda_i|u_i'\rangle\langle v_i|\), the SVD. We’ll see throughout the course of this book that it simplifies many things.

I want to revisit the equivalence of the two forms of the SVD with this new language. Given a \(UDV^T\) representation of a matrix, \(A\), we can write \(V^T=\sum_i|e_i\rangle\langle v_i|\), where the \(\langle v_i|\) are the rows of \(V^T\), and we can write \(U=\sum_i|u_i\rangle\langle e_i|\) where the \(|u_i\rangle\) are the columns of \(U\). And of course \(D=\sum_i\lambda_i|e_i\rangle\langle e_i|\), where \(\lambda_i\) are the diagonal components of \(D\). Now we have

\[A=UDV^T=\left(\sum_i|u_i\rangle\langle e_i|\right)\left(\sum_j\lambda_j|e_j\rangle\langle e_j|\right)\left(\sum_k|e_k\rangle\langle v_k|\right)=\sum_i\lambda_i|u_i\rangle\langle v_i|\]

where the last equality follows from the orthonormality of \(|e_i\rangle\).

In this section we continue to build intuition.

Coordinate Systems

Consider a basis, \(\mathcal B\), of \(\mathbb R^3\) given by \(\vec v_1=\langle 1/\sqrt2, 1/\sqrt2, 0\rangle\), \(\vec v_2=\langle 1/\sqrt2, -1/\sqrt2, 0\rangle\), \(\vec v_3=\langle 0, 0, -1\rangle\). Because \(\mathcal B\) is a basis, we know that any vector in \(\mathbb R^3\) can be written as a linear combination of these three vectors. For example, \(\langle 1, 2, 3\rangle = 3/\sqrt2 \vec v_1-1/\sqrt2\vec v_2-3\vec v_3\). Occasionally, this linear combination is written in its own vector notation, with a special \(\mathcal B\) subscript: \(\langle 1, 2, 3\rangle=\langle 3/\sqrt2, -1/\sqrt2, -3\rangle_{\mathcal B}\).

The purpose of this short section is to make the connection that both \(\langle 1, 2, 3\rangle\) and \(\langle 3/\sqrt2, -1/\sqrt2, -3\rangle_{\mathcal B}\) are equally legitimate representations of the same vector. This is a conceptual decoupling between a vector and the coordinates we use to write it. The observation is akin to the realization that the number \(14\) can be written in binary as \(1110\); the number itself is not either of these things, but is merely represented by them.
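The coordinates in \(\mathcal B\) can be computed rather than guessed. The sketch below assumes NumPy and relies on the fact that this particular basis is orthonormal, so each coordinate is an inner product with a basis vector; `B`, `x`, and `coords` are names introduced here.

```python
import numpy as np

s2 = np.sqrt(2.0)
# Columns of B are the basis vectors v_1, v_2, v_3.
B = np.array([[1 / s2,  1 / s2,  0.0],
              [1 / s2, -1 / s2,  0.0],
              [0.0,     0.0,    -1.0]])

x = np.array([1.0, 2.0, 3.0])

# B is orthonormal, so the coordinates are inner products with each basis vector.
coords = B.T @ x
print(coords)                       # approximately (3/sqrt2, -1/sqrt2, -3)
print(np.allclose(B @ coords, x))   # the coordinates rebuild the same vector
```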

And if all choices of coordinates are equally legitimate, we may as well choose the bases given by the SVD, \(M=\sum_i\lambda_i|u_i\rangle\langle v_i|\). That is, if \(\mathcal B_u=(|u_1\rangle, |u_2\rangle, \cdots, |u_n\rangle)\) and \(\mathcal B_v=(|v_1\rangle, |v_2\rangle, \cdots, |v_n\rangle)\) then \(M\langle x_1, x_2, \cdots, x_n\rangle_{\mathcal B_v}=\langle \lambda_1x_1, \lambda_2x_2, \cdots, \lambda_nx_n\rangle_{\mathcal B_u}\). So the existence of an SVD says that any matrix is merely a dilation under the correct coordinate systems. Quite a surprising fact!
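Here is a small numerical illustration of that claim, assuming NumPy. It reads the \(\vec v_i\) as the basis for the input and the \(\vec u_i\) as the basis for the output; the matrix `M` and the coordinates `x_v` are arbitrary choices made for this sketch.

```python
import numpy as np

M = np.array([[2.0, 1.0],
              [0.0, 3.0]])
U, s, Vt = np.linalg.svd(M)

x_v = np.array([0.7, -1.2])   # coordinates of the input in the basis v_1, v_2
x = Vt.T @ x_v                # the same input in standard coordinates
y = M @ x                     # apply the map in standard coordinates
y_u = U.T @ y                 # coordinates of the output in the basis u_1, u_2

# In these coordinates, M only rescales each coordinate by lambda_i.
print(np.allclose(y_u, s * x_v))
```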

We can take this notation a bit further even.

Functions on Coordinate Systems

You and two friends, Leeta and Odo, are doing some classical physics problem, and have to draw your own axes to solve the problem. You correctly label the canonical \(x\) and \(y\) axes, \(\langle 1,0\rangle\) and \(\langle 0,1\rangle\). But Leeta is looking at the problem from an angle, so she ends up with axes \(\langle 1/\sqrt2, 1/\sqrt2\rangle\) and \(\langle -1/\sqrt2, 1/\sqrt2\rangle\). And Odo is left-handed, so he ends up with axes \(\langle 0,1\rangle\) and \(\langle 1,0\rangle\). As an intermediate step, the problem requires you to write down a rotation matrix. Because the axes are made up by the solver, they shouldn’t affect the ultimate solution. So you can write the rotation in any basis. When you use the canonical basis \(\mathcal B\), you get the usual rotation matrix \([R]_{\mathcal B\mathcal B}=\left(\begin{array}{cc}\cos\theta & -\sin\theta \\ \sin\theta & \cos\theta\end{array}\right)\). When Leeta calculates the same matrix in her basis, \(\mathcal B_L\), she too ends up with \([R]_{\mathcal B_L\mathcal B_L}=\left(\begin{array}{cc}\cos\theta & -\sin\theta \\ \sin\theta & \cos\theta\end{array}\right)\). Note the notation on the matrix, which means that both the domain and range are written in Leeta’s coordinates, \(\mathcal B_L\). Now Odo’s a little odd, so in his coordinates, \(\mathcal B_O\), counterclockwise rotation rotates his \(y\)-axis towards his \(x\)-axis, and he gets \([R]_{\mathcal B_O\mathcal B_O}=\left(\begin{array}{cc}\cos\theta & \sin\theta \\ -\sin\theta & \cos\theta\end{array}\right)\).

As you may guess, it’s also possible to write down the rotation matrix where the input is in one coordinate system and the output is in another. For example, with the input in Odo’s coordinates and the output in Leeta’s, \([R]_{\mathcal B_L\mathcal B_O}=\left(\begin{array}{cc} -\sin\left(\theta-\pi/4\right) & \cos\left(\theta-\pi/4\right) \\ \cos\left(\theta-\pi/4\right) & \sin\left(\theta-\pi/4\right)\end{array}\right)\).

If we have a change-of-basis matrix, \(C\) that maps our coordinates to Leeta’s then we could write, for any matrix, \(M\), \([M]_{\mathcal B\mathcal B}=C^{-1}[M]_{\mathcal B_L\mathcal B_L}C\). The change-of-basis matrix itself can be written as \(C=[I]_{\mathcal B_L\mathcal B}\).
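The same-basis rotation matrices for Leeta and Odo can be checked with the change-of-basis formula. This is a sketch assuming NumPy; the angle `theta` is arbitrary, and the matrices `L` and `O` (whose columns are each person's axes) are names introduced here.

```python
import numpy as np

theta = 0.3
c, s = np.cos(theta), np.sin(theta)
R = np.array([[c, -s],
              [s,  c]])           # rotation in the canonical basis

h = np.sqrt(0.5)
L = np.array([[h, -h],
              [h,  h]])           # columns are Leeta's axes
O = np.array([[0.0, 1.0],
              [1.0, 0.0]])        # columns are Odo's axes

C = np.linalg.inv(L)              # maps canonical coordinates to Leeta's
R_LL = C @ R @ np.linalg.inv(C)   # rotation in Leeta's coordinates
R_OO = np.linalg.inv(O) @ R @ O   # rotation in Odo's coordinates

print(np.allclose(R_LL, R))                             # Leeta sees the usual matrix
print(np.allclose(R_OO, np.array([[c, s], [-s, c]])))   # Odo sees the signs flipped
print(np.allclose(np.linalg.inv(C) @ R_LL @ C, R))      # converting back recovers R
```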

With this notation for matrices in different bases, the SVD of a matrix \(M\) is simply a pair of orthonormal bases \(\mathcal B\) and \(\mathcal B'\) for which \([M]_{\mathcal B\mathcal B'}\) is diagonal.

Linear forms can be defined in more general settings. But we’ll define a linear form as a function \(f:\mathbb R^n \to\mathbb R\) that is "linear", meaning that it satisfies the two properties:

\(f\left(\vec x+\vec y\right)=f\left(\vec x\right) + f\left(\vec y\right)\) for all \(\vec x,\vec y\in\mathbb R^n\).

\(f\left(\lambda\vec x\right)=\lambda f\left(\vec x\right)\) for all \(\lambda\in\mathbb R\) and \(\vec x\in\mathbb R^n\).

We state without proving that all linear forms can be written as \(\lambda\langle u|\) for some unit vector \(\vec u\), meaning that \(f\left(|x\rangle\right)=\lambda\langle u|x\rangle\).

By the definition of linear forms, the sum of any two linear forms is itself a linear form. It’s interesting, though not surprising that the sum of two covectors \(\lambda\langle u|\) must therefore result in a new covector, so that it can also be written this way.

By analogy a bilinear form is a function \(f:\mathbb R^m\times\mathbb R^n \to\mathbb R\) that satisfies linearity for both inputs:

\(f\left(\vec x+\vec y,\vec z\right)=f\left(\vec x,\vec z\right) + f\left(\vec y,\vec z\right)\) for all \(\vec x,\vec y\in\mathbb R^m\) and \(\vec z\in\mathbb R^n\).

\(f\left(\vec z,\vec x+\vec y\right)=f\left(\vec z,\vec x\right) + f\left(\vec z,\vec y\right)\) for all \(\vec z\in\mathbb R^m\) and \(\vec x,\vec y\in\mathbb R^n\).

\(f\left(\lambda\vec x,\vec y\right)=\lambda f\left(\vec x,\vec y\right)=f\left(\vec x,\lambda\vec y\right)\) for all \(\lambda\in\mathbb R\), \(\vec x\in\mathbb R^m\), and \(\vec y\in\mathbb R^n\).

We also state without proof that all bilinear forms can be written as a matrix, \(A\), so that \(f\left(\vec x, \vec y\right)=\langle x|A|y\rangle\). Conversely all matrices yield a bilinear form in this way. So maybe a good way to think about matrices is exactly as bilinear forms. In analogy to linear forms, say that a particular matrix \(A\) can be written as \(\lambda|u\rangle\langle v|\). Then its action on a pair of vectors \(\vec x,\vec y\) is \(\lambda\langle x|u\rangle\langle v|y\rangle\), which is a product of two linear forms. This seems like a useful analogy. But unlike linear forms, we can’t add together a bunch of matrices of the form \(\lambda|u\rangle\langle v|\) and still expect to get a matrix of the form \(\lambda|u\rangle\langle v|\). Instead you get a sum of such matrices, \(\sum_i\lambda_i|u_i\rangle\langle v_i|\). The surprising thing here is that even if you added very many such matrices, you could always reduce the sum to \(r:=\min(m, n)\) summands. Either way, this representation seems like a sensible way to represent a matrix.
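Both observations can be illustrated numerically. The sketch below assumes NumPy and uses randomly generated vectors purely for illustration; it relies on the existence of an SVD (via `numpy.linalg.svd`) to collapse a ten-term sum into \(\min(m, n)\) terms.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 2, 3

# A single term lambda |u><v| acts as a product of two linear forms.
lam, u, v = 2.0, rng.standard_normal(m), rng.standard_normal(n)
x, y = rng.standard_normal(m), rng.standard_normal(n)
single = lam * np.outer(u, v)
print(np.isclose(x @ single @ y, lam * (x @ u) * (v @ y)))

# Add up ten random terms of that shape.
A = sum(rng.standard_normal()
        * np.outer(rng.standard_normal(m), rng.standard_normal(n))
        for _ in range(10))

# An SVD rewrites the ten-term sum using only min(m, n) terms.
U, s, Vt = np.linalg.svd(A)
A_short = sum(s[i] * np.outer(U[:, i], Vt[i]) for i in range(min(m, n)))
print(np.allclose(A_short, A))
```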

The bra-ket form of the SVD yields a set of scalars \(\left\{\lambda_i\right\}\) and vectors \(\left\{\vec u_i, \vec v_i\right\}\). We can and do require that all of these be real. We also require that all the scalars be positive \(\lambda_i>0\). We can require this because:

If an SVD has a negative summand, \(\lambda_i|u\rangle\langle v|\), then we get a positive-only SVD by replacing that summand with \((-\lambda_i)|-u\rangle\langle v|\).

If an SVD has a summand with \(\lambda_i=0\), then we omit this and don’t count it as part of the SVD.

Finally we adopt the convention that these are ordered: \(\lambda_1\geq\lambda_2\geq\cdots\geq\lambda_r\).

We prove in Section 3 that, with our conventions, the multiset of scalars is unique to the matrix \(A\); that is, all SVDs of \(A\) yield the same multiset. We call these values the singular values of \(A\).

The goal of this book is to build intuition and demonstrate applications. With the intuition, the applications will seem natural. (You should agree when you read Chapter 2.) Hopefully by the end of the book, you’ll be able to recall or modify the techniques more easily. At this point, you should have some intuition for what an SVD is, but how somebody comes up with such a representation is still fairly abstract. The rest of this chapter proves the existence and almost-uniqueness of the SVD. Outside of these sections, this book generally is not heavy on the proofs. But the techniques used in these proofs should help you get a concrete sense for what the SVD is.

Exercises

Continuing the example from the beginning of the chapter, write \(\left( \begin{array}{ccc} 1 & 1 & 2 \\ 3 & 5 & 8 \end{array} \right)\) in bra-ket form. (Note: You should not have to recalculate anything.)

[Ex-Confirm SVD] Confirm the calculation in Example X by multiplying and adding the bra-ket form of \(A\) and by multiplying the matrix form of \(A\). These should be the same.

Suppose the matrix \(A=\left(\begin{array}{ccc}1&0&0\\0&0&0\\0&0&0\end{array}\right)\) has a bra-ket form representation with a single term \(\lambda|u\rangle\langle v|\), where \(\vec u\) and \(\vec v\) are unit vectors. Prove that \(\lambda=\pm 1\), \(|u\rangle=\pm|e_1\rangle\), and \(\langle v|=\pm\langle e_1|\). (This is a specific case of the almost-uniqueness that we prove in a later section.)

[Ex-Rectangular bra-ket to matrix] Given a matrix with SVD in bra-ket form: \[A=2\left(\begin{array}{c}1\\0\\0\end{array}\right)\left(\begin{array}{cc}1&0\end{array}\right) + 2\left(\begin{array}{c}0\\0\\1\end{array}\right)\left(\begin{array}{cc}0&1\end{array}\right)\] Write \(A\) in matrix-form SVD two different ways.

The orthonormality of \(U\) and \(V\) will often be useful. Given an SVD \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), verify the following.

For \(|v\rangle = \sum_ia_i|v_i\rangle\), \(A|v\rangle=\sum_ia_i\lambda_i|u_i\rangle\).

\(\langle u_i|A|v_i\rangle=\lambda_i\).

[Ex-SVD Null Space] Given a matrix with SVD \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), prove that \(A\vec v=0\) if and only if \(\vec v\) is perpendicular to each \(\vec v_i\).

[Ex-SVD Range] Given a matrix with SVD \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), prove that a vector \(\vec u\) equals \(A\vec v\) for some \(\vec v\) if and only if \(\vec u\in\operatorname{span}\left(\vec u_i\right)\).

An invertible square matrix has an SVD \(A=UDV^T\), with \(D=\left(\begin{array}{cccc}\lambda_1&&&\\ &\lambda_2&&\\ &&\ddots&\\ &&&\lambda_r\end{array}\right)\). Show that all \(\lambda_i\neq0\). Next argue that \(D^{-1}=\left(\begin{array}{cccc}\frac{1}{\lambda_1}&&&\\&\frac{1}{\lambda_2}&&\\&&\ddots&\\&&&\frac{1}{\lambda_r}\end{array}\right)\). Finally prove that \(A^{-1}=VD^{-1}U^T\).

In this section, we prove the existence of an SVD. This proof is constructive, in the sense that it gives an algorithm for building a bra-ket form of an SVD.

Algorithm 1: SVD

Set \(A_0 \gets A\) and \(i \gets 1\).

While \(A_{i-1}\neq 0\):

Find the unit vectors \(\vec u _i\) and \(\vec v _i\) that maximize \(\langle u _i|A_{i-1}| v _i\rangle\).

Set \(A_i \gets A_{i-1}-| u _i\rangle\langle u _i|A_{i-1}| v _i\rangle\langle v _i|\), then \(i \gets i+1\).

When the algorithm finishes, we have \(A_r=0\). That is, \(0=A_r=A-\sum_{i=1}^r| u _i\rangle\langle u _i|A_{i-1}| v _i\rangle\langle v _i|\). We recognize that \(\lambda_i:=\langle u _i|A_{i-1}| v _i\rangle\) is just a scalar, and add the sum to both sides so that we get \(A=\sum_{i=1}^r\lambda_i| u _i\rangle\langle v _i|\). The resulting vectors are normal, by choice. But for this to be an SVD as described in the first section, we need the vectors \(\left\{u_i\right\}_i\) to be a perpendicular set, and for \(\left\{v_i\right\}_i\) to be a perpendicular set. We prove that this is so in Theorem 2.7.

An objection to this algorithm may be that it only works if the while loop eventually terminates: it must be true that \(A_r=0\) for some \(r\). In Theorem 2.8, we show that for \(A\) with dimension \(m\times n\) this must happen within \(r := \min(m, n)\) steps.
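The loop in Algorithm 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text does not say how to find the maximizing pair, so the helper `top_pair` below swaps in power iteration on \(A^TA\) for that step, and the names `top_pair`, `greedy_svd`, `iters`, and `tol` are hypothetical. The example matrix is chosen so that the singular values come out as \(5\) and \(3\).

```python
import math

def matvec(A, x):
    """Multiply the matrix A (list of rows) by the vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def norm(x):
    return math.sqrt(sum(c * c for c in x))

def top_pair(A, iters=200):
    """Approximate unit vectors u, v maximizing <u|A|v>, and lam = <u|A|v>.

    Assumption: power iteration on A^T A stands in for the maximization step.
    """
    At = [list(col) for col in zip(*A)]
    # Start from the standard basis vector whose column of A is longest,
    # so that the start is not in the null space of A.
    j = max(range(len(At)), key=lambda k: norm(At[k]))
    v = [1.0 if k == j else 0.0 for k in range(len(At))]
    for _ in range(iters):
        w = matvec(At, matvec(A, v))
        size = norm(w)
        v = [c / size for c in w]
    Av = matvec(A, v)
    lam = norm(Av)
    u = [c / lam for c in Av]          # the matching u points along A|v>
    return lam, u, v

def greedy_svd(A, tol=1e-9):
    """Return [(lam_i, u_i, v_i)] with A approximately sum_i lam_i |u_i><v_i|."""
    R = [row[:] for row in A]          # R plays the role of A_{i-1}
    terms = []
    max_steps = min(len(A), len(A[0])) # at most min(m, n) steps
    while len(terms) < max_steps and max(abs(c) for row in R for c in row) > tol:
        lam, u, v = top_pair(R)
        terms.append((lam, u, v))
        # Subtract the captured signal lam |u><v| from the remainder.
        R = [[R[i][j] - lam * u[i] * v[j] for j in range(len(v))]
             for i in range(len(u))]
    return terms

A = [[3.0, 2.0, 2.0], [2.0, 3.0, -2.0]]
terms = greedy_svd(A)
for lam, u, v in terms:
    print(round(lam, 6), u, v)
```

Power iteration can stall if the starting vector happens to be perpendicular to the maximizing direction, so treat this as a demonstration of the subtract-and-repeat structure rather than a robust solver.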

Before proving these two theorems, let’s build some intuition. We see that \(| u _i\rangle\langle u _i|A_{i-1}| v _i\rangle\langle v _i|=\lambda_i| u _i\rangle\langle v _i|\) is just a matrix; and it’s the same shape as \(A_{i-1}\). But it’s not just any matrix. It’s the matrix that captures all the signal that \(A_{i-1}\) has in the axes \(\vec u_i\) and \(\vec v_i\). To illustrate what this means, let’s first look at a lower-dimensional analogy.

Covector Analogy

Consider the covector \(\vec v=\left(\begin{array}{ccc} 3 & 3 & 2 \end{array}\right)\). If we wanted to remove all the signal along the axis \(\vec e_1 = \left(\begin{array}{ccc} 1 & 0 & 0 \end{array}\right)\), then we would subtract \(3\vec e_1 = \left(\begin{array}{ccc} 3 & 0 & 0 \end{array}\right)\) from \(\vec v\) to get \(\left(\begin{array}{ccc} 0 & 3 & 2 \end{array}\right)\).

Saying that this "removes signal" along \(e_1\) means that now any vector along \(\vec e_1\), \(\lambda\vec e_1\), gets zeroed out by \(\vec v-3\vec e_1\). That is, \(\langle v-3e_1|e_1\rangle=0\). We arrived at the coefficient \(\lambda=3\) by looking at the first element of \(\vec v\). This can be formalized as \(3=\langle v|e_1\rangle\).

Now we could repeat this with the second dimension, \(3=\langle v|e_2\rangle\). So now \(\langle v|-3\langle e_1|-3\langle e_2| = \left(\begin{array}{ccc} 0 & 0 & 2 \end{array}\right)\). This covector equals zero when it pairs with any linear combination of \(|e_1\rangle\) and \(|e_2\rangle\). That is, \(\left\langle\left(\begin{array}{ccc} 0 & 0 & 2 \end{array}\right)|\left(\begin{array}{ccc} \lambda & \mu & 0 \end{array}\right)\right\rangle = 0\). We can do an SVD-like algorithm to assign \(\langle v|\)’s signal to its components: \(\langle v| = \sum_{i=1}^3\langle v|e_i\rangle\langle e_i|\).

Moreover there’s nothing special about the standard vectors. It’s true that for any orthonormal basis \(\left\{\vec v _i\right\}_{i=1}^3\), \(\langle v| = \sum_{i=1}^3\langle v| v _i\rangle\langle v _i|\). It’s further true that if we stop short, the covector zeros out the vectors encountered so far, like \(\left(\langle v|-\langle v| v _1\rangle\langle v _1|-\langle v| v _2\rangle\langle v _2|\right)\left(\lambda| v _1\rangle+\mu| v _2\rangle\right)=0\). We say that \(\langle v| v _i\rangle\langle v _i|\) "captures all the info" from \(\langle v|\) in the direction of \(\langle v _i|\), because when we subtract off that bit, there’s nothing left in that direction.

This process is known as the Gram-Schmidt process applied to orthonormal vectors. The SVD can be thought of as a generalization of this to matrices. We say that \(| u \rangle\langle u |A| v \rangle\langle v |\) captures all the info from \(A\) in the direction of \(\vec u\) and \(\vec v\), because \(\langle u |\left(A-| u \rangle\langle u |A| v \rangle\langle v |\right)| v \rangle=0\). (Check that this is true.) This isn’t a proof yet, but this is why we think to try this.
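The covector analogy can be checked numerically. The sketch below is illustrative only: the two orthonormal directions `v1` and `v2` are made up for the example, and `rest` is a hypothetical name for what remains of \(\langle v|\) after the subtractions.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

v = [3.0, 3.0, 2.0]            # the covector <v| from the text
s = 1.0 / math.sqrt(2.0)
v1 = [s, s, 0.0]               # two orthonormal directions (illustrative choice)
v2 = [s, -s, 0.0]

rest = v[:]
for w in (v1, v2):
    c = dot(v, w)              # the coefficient <v|w>
    rest = [r - c * x for r, x in zip(rest, w)]   # subtract <v|w><w|

print(rest)                    # what is left of <v|
print(dot(rest, v1), dot(rest, v2))
```

After both subtractions, `rest` pairs to (numerically) zero with `v1`, with `v2`, and therefore with every linear combination of the two.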

By choosing the maximizing pair of vectors for remaining signal with each step, we guarantee that we’re getting perpendicular vectors from earlier vectors. The reasoning is that if we chose some vector that was pointing in a similar direction as an earlier vector, then we’d be pointing partly in a zeroing direction. The zeroing part of the new matrix, \(| u \rangle\langle u |A| v \rangle\langle v |\), starts by projecting onto the zeroed axis; if this projection is non-zero, then some part of the vector is going to waste.

Lemma 2.3. For any matrix \(A\), if \(\langle u |A| v \rangle=\max_{\|u_i\|=\|v_i\|=1}\langle u_i |A| v_i \rangle\), then \(A| v \rangle=\lambda| u \rangle\), for some scalar \(\lambda\).

Proof. Fix the maximizing \(|v\rangle\). \(A| v \rangle\) is some vector, which we write as \(\lambda|t\rangle\), where \(|t\rangle\) is a unit vector and \(\lambda\geq0\). (The magnitude has been factored out into the scalar \(\lambda\).) So \(\langle u |A| v \rangle=\lambda\langle u | t\rangle\). Given that \(\langle u |\) and \(| t\rangle\) are unit vectors, the inner product is given by the cosine of the angle between them (Theorem 9.6), which is maximized when the vectors are the same. So the maximizing \(|u\rangle\) equals \(|t\rangle\), and \(A|v\rangle=\lambda|u\rangle\). ◻

This is an important fact that helps with the next lemma. It also advances our intuition. We think of a matrix \(A\) as acting on a vector by projecting that vector onto a covector (\(\vec v\)) and using the resulting coefficient \(\lambda\) to tell us how far to go in the direction of another vector \(u\). The above lemma says that when we’ve chosen the maximizing unit vector (\(u\)) and covector (\(v\)), as prescribed by the SVD algorithm, we’ve chosen a matching pair. If we wanted to remove the effect of the projecting onto \(v\), then we need to subtract from the output some multiple of \(u\).

Note that though Lemma 2.3 doesn’t make a claim about the value of \(\lambda\), it’s easy to see that \(\lambda=\langle u |A| v\rangle\), because \(\langle u|A|v\rangle = \lambda\langle u|u\rangle = \lambda\).
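Lemma 2.3 can be spot-checked by brute force on a small example. The sketch below is an illustration, not part of the proof: the matrix is built (as an assumption for the demo) from a 30-degree rotation, a diagonal stretch of \(3\) and \(1\), and a 60-degree rotation, so that the maximizing unit vectors land exactly on a one-degree grid. The helper names are hypothetical.

```python
import math

def rot(deg):
    t = math.radians(deg)
    return [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def unit(deg):
    t = math.radians(deg)
    return [math.cos(t), math.sin(t)]

def apply(A, v):
    return [A[0][0] * v[0] + A[0][1] * v[1], A[1][0] * v[0] + A[1][1] * v[1]]

# Demo matrix: rotation by 30 degrees, times diag(3, 1), times the transpose
# of a rotation by 60 degrees.
V = rot(60)
Vt = [list(col) for col in zip(*V)]
A = matmul(matmul(rot(30), [[3.0, 0.0], [0.0, 1.0]]), Vt)

def pairing(u, v):
    Av = apply(A, v)
    return u[0] * Av[0] + u[1] * Av[1]

# Search every pair of unit vectors on a one-degree grid for the largest <u|A|v>.
lam, a, b = max((pairing(unit(a), unit(b)), a, b)
                for a in range(360) for b in range(360))
u, v = unit(a), unit(b)
Av = apply(A, v)
gap = math.hypot(Av[0] - lam * u[0], Av[1] - lam * u[1])
print(lam, a, b, gap)
```

Here `gap` measures the length of \(A|v\rangle-\lambda|u\rangle\) for the best pair found; the lemma says it should vanish, and numerically it is zero up to rounding.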

Next we use this fact to see:

Lemma 2.4. Suppose that \(\langle u_1|\) and \(|v_1\rangle\) maximize \(\langle u|A|v\rangle\) over unit vectors. Define \(A_1=A-\lambda_1| u _1\rangle\langle v _1|\), with \(\lambda_1=\langle u_1|A|v_1\rangle\). Then \(A_1| v _1\rangle\langle v _1|=0\).

Proof. \[\begin{array}{rcll} A_1| v _1\rangle\langle v _1| &=& \left(A-\lambda_1| u _1\rangle\langle v _1|\right)| v _1\rangle\langle v _1|& \\ &=& A| v _1\rangle\langle v _1| - \lambda_1| u _1\rangle\langle v _1| v _1\rangle\langle v _1| & \quad\text{Distribute}\\ &=& \left(A| v _1\rangle\right)\langle v _1| - \lambda_1| u _1\rangle\langle v _1|& \\ &=& \left(\lambda_1| u _1\rangle\right)\langle v _1| - \lambda_1| u _1\rangle\langle v _1|&\quad\text{By above lemma} \\ &=& 0 \end{array}\] ◻

We can immediately conclude from Lemma 2.4 that \(A_1=A_1\left(I-| v _1\rangle\langle v _1|\right)\). This says that applying \(A_1\) to a vector \(| v \rangle\) is the same as first applying \(\left(I-| v _1\rangle\langle v _1|\right)\) to \(| v \rangle\), then applying \(A_1\) to the resulting vector. \(\left(I-| v _1\rangle\langle v _1|\right)\) is a special matrix that acts on any vector by projecting onto the subspace of all vectors perpendicular to \(| v _1\rangle\). This will be justified when we reach the section on projection matrices. For now it’s sufficient to understand:

\(\left(I-| v _1\rangle\langle v _1|\right)| v \rangle\) is exactly \(| v \rangle\) when \(\langle v _1| v \rangle=0\), i.e., when \(v\) is perpendicular to \(v _1\), and

for a unit vector \(| v \rangle\), \(\left\Vert\left(I-| v _1\rangle\langle v _1|\right)| v \rangle\right\Vert < 1\) otherwise.

The first statement is obvious. The second statement should be fairly intuitive because we’re subtracting off a component of a vector. This is analogous to zeroing out the first term of a unit vector, which will leave it smaller. A more formal proof of this point is left as Exercise [Ex-Finish Projection].

We state without justification that this also holds when more than one direction has been removed. This fact follows in much the same way as the single case we’ve discussed, but the proof is tedious.

Theorem 2.5. For \(A_i\) as defined above,

(a) \(A_i| v \rangle=A_i\left(I-\sum_{k=1}^{i}| v _k\rangle\langle v _k|\right)| v \rangle\)

(b) For a unit vector \(| v \rangle\), \(\left\Vert\left(I-\sum_{k=1}^{i}| v _k\rangle\langle v _k|\right)| v \rangle\right\Vert<1\) unless \(| v \rangle\) is perpendicular to each of the \(| v _k\rangle\) with \(k\leq i\)

Finally because the same logic can be done on the other side of the matrix, we have \(A_i=\left(I-\sum_{k=1}^i| u _k\rangle\langle u _k|\right)A_i\). So we make a more general statement.

Corollary 2.6. For \(A_i\) as defined above, \[A_i=\left(I-\sum_{k=1}^i| u _k\rangle\langle u _k|\right)A_i\left(I-\sum_{k=1}^{i}| v _k\rangle\langle v _k|\right)\]

We’re ready to prove the theorems mentioned at the opening of this chapter. First we’ll prove that the vectors \(\left\{\langle u_i|\right\}\) are perpendicular, and that \(\left\{|v_i\rangle\right\}\) are perpendicular. Specifically we argue that each new vector generated by the algorithm is perpendicular to all previous ones.

Theorem 2.7. Let \(A_i\) be as in Algorithm [Algorithm1] with \(A_i\neq0\). If the unit vectors \(u_{i+1}\), \(v_{i+1}\) maximize \(\langle u |A_i| v \rangle\), then \(u_{i+1}\) is perpendicular to \(u_j\) and \(v_{i+1}\) is perpendicular to \(v_j\) for all \(j\leq i\).

Proof. Suppose to the contrary that one or both of the vectors is not perpendicular, and that the maximum is \(M\).

\(M\) must be positive. To see this: \(\langle u|A_i|v\rangle\) must be non-zero for at least one pair of unit vectors, for otherwise \(A_i=0\). Consider any such non-zero value, \(N=\langle u|A_i|v\rangle\). If \(N\) is positive, then the maximum \(M\geq N>0\). Otherwise \(-N=\langle -u|A_i|v\rangle\) is positive, which also implies \(M\geq -N>0\).

Using Corollary 2.6, we can write \(M\) as:

\[M=\langle u |A_i| v \rangle=\langle u |\left(I-\sum_{k=1}^i| u _k\rangle\langle u _k|\right)A_i\left(I-\sum_{k=1}^{i}| v _k\rangle\langle v _k|\right)| v \rangle=\langle u '|A_i| v '\rangle\]

where \(\langle u'|=\langle u |\left(I-\sum_{k=1}^i| u _k\rangle\langle u _k|\right)\) and \(|v'\rangle=\left(I-\sum_{k=1}^{i}| v _k\rangle\langle v _k|\right)| v \rangle\).

Because we assumed that \(\vec u\) or \(\vec v\) is not perpendicular to all previous vectors, at least one of the vectors \(u '\) or \(v '\) has magnitude less than \(1\), by Theorem 2.5, point (b). (Neither has magnitude greater than \(1\), and neither is zero because \(M>0\).)

We can now reach a contradiction, because the unit vectors \(\frac{\langle u '|}{\left\Vert\langle u '|\right\Vert}\) and \(\frac{| v '\rangle}{\left\Vert| v '\rangle\right\Vert}\) do a better job of maximizing \(\langle u|A_i|v\rangle\) than the supposed maximizers \(\langle u|\) and \(|v\rangle\). This is because:

\[\frac{\langle u '|}{\left\Vert\langle u '|\right\Vert}A_i\frac{| v '\rangle}{\left\Vert| v '\rangle\right\Vert} = \frac{\langle u'|A_i|v'\rangle}{\left\Vert\langle u '|\right\Vert\left\Vert| v '\rangle\right\Vert} = \frac{M}{\left\Vert\langle u '|\right\Vert\left\Vert| v '\rangle\right\Vert} > M >0\] ◻

Finally we prove that Algorithm [Algorithm1] terminates.

Theorem 2.8. If \(A\) is dimension \(m\times n\), call \(r:=\min(m,n)\), then \(A_r=0\).

Note: This doesn’t preclude \(A_{r'}=0\) for some \(r'<r\). If that happens, then the algorithm will terminate early.

Proof. Suppose we’ve gone through \(r\) steps. Then it must be that the set of perpendicular covectors \(\left\{\langle u _i|\right\}\) spans the codomain or the set of perpendicular vectors \(\left\{| v _i\rangle\right\}\) spans the domain, depending on whether \(r=m\) or \(r=n\). Without loss of generality, say that the domain is spanned. Then \[\begin{array}{rcl} A_r&=&A_r\left(I-\sum_{i=1}^{r}| v _i\rangle\langle v _i|\right)\\ &=&A_r\cdot 0\\ &=&0 \end{array}\]

The first equality follows from Theorem 2.5 and the second from Exercise [Ex-Outer Product Identity]. ◻

Before moving on, we’ll state a fact that we’ll need later. This is a little out of place here, except that it shows a nice application of the concepts covered in this section.

We will see in Exercise [Ex-Outer Product Identity] that for any orthonormal basis \(\left\{|v_i\rangle\right\}\), we have \(I=\sum_i|v_i\rangle\langle v_i|\). And a natural extension of this is that if you had two sets of orthonormal vectors, \(\left\{|v_i\rangle\right\}\) and \(\left\{|v_i'\rangle\right\}\), spanning the same space, then \(\sum_i|v_i\rangle\langle v_i|=\sum_i|v_i'\rangle\langle v_i'|\). This is true, but we’ll need an even more general statement. We will encounter in the next section sums of outer products, but they won’t necessarily be symmetric. So we need that \(\sum_i|u_i\rangle\langle v_i|=\sum_i|u_i'\rangle\langle v_i'|\). This only happens under special circumstances, but these happen to be the circumstances that arise when picking apart the SVD.

Lemma 2.9. For orthonormal sets of vectors \(\left\{\langle u_i|\right\}_{i=1}^n\), \(\left\{|v_i\rangle\right\}_{i=1}^n\), \(\left\{\langle u_i'|\right\}_{i=1}^n\), and \(\{|v_i'\rangle\}_{i=1}^n\), we have \[\sum_i|u_i\rangle\langle v_i|=\sum_i|u_i'\rangle\langle v_i'|\] if the following conditions hold:

\(\operatorname{span}\left\{\langle u_i|\right\}=\operatorname{span}\left\{\langle u_i'|\right\}\) and \(\operatorname{span}\left\{|v_i\rangle\right\}=\operatorname{span}\left\{|v_i'\rangle\right\}\).

There exists a fixed linear map \(B\) such that \(B|v_i\rangle=|u_i\rangle\) and \(B|v_i'\rangle=|u_i'\rangle\).

For the second point, there will always exist some mapping from one orthonormal set to another (of the same cardinality). The key assumption here is that the \(B\) is the same between the pairs of sets.

Proof. Call \(M=\sum_i|u_i\rangle\langle v_i|\) and \(M'=\sum_i|u_i'\rangle\langle v_i'|\). To prove these matrices are equal, we show that they act the same on every vector. We choose some arbitrary vector \(|v\rangle\), and show that \(M|v\rangle=M'|v\rangle\).

By Theorem 9.5, we can write \(|v\rangle=|v_\parallel\rangle+|v_\bot\rangle\), with \(W\) in the theorem set as \(\operatorname{span}\left\{|v_i\rangle\right\}=\operatorname{span}\left\{|v_i'\rangle\right\}\). With this construction \(\langle v_i|v_\bot\rangle=0\) for each \(\langle v_i|\), so \(M|v_\bot\rangle=M'|v_\bot\rangle=0\).

Because \(|v_\parallel\rangle\in\operatorname{span}\{|v_i\rangle\}\), \(|v_\parallel\rangle=\sum_ia_i|v_i\rangle\).

\[\begin{array}{rcl} M|v\rangle &=& M|v_\parallel\rangle+M|v_\bot\rangle \\ &=& M|v_\parallel\rangle \\ &=& \left(\sum_i|u_i\rangle\langle v_i|\right)\left(\sum_ja_j|v_j\rangle\right) \\ &=& \sum_i|u_i\rangle a_i \\ &=& \sum_ia_iB|v_i\rangle \\ &=& B\left(\sum_ia_i|v_i\rangle\right) \\ &=& B|v_\parallel\rangle \end{array}\]

The same calculation with primes on all the \(M\), \(u\), and \(v\) variables gives that \(M'|v\rangle\) also equals \(B|v_\parallel\rangle\). ◻

Exercises

[Ex-Finish Projection] Let \(\vec u\) and \(\vec v\) be unit vectors. Prove that if \(\langle u|v\rangle\neq0\), then \(\left\Vert\left(I-|u\rangle\langle u|\right)|v\rangle\right\Vert < 1\). (Hint: For any vector \(\vec v\), \(\Vert \vec v \Vert<1\) if and only if \(\langle v | v \rangle<1\).)

[Ex-Outer Product Identity] Given an orthonormal basis \(\left\{\vec v_i\right\}_{i=1}^n\) of \(\mathbb R^n\), prove that \(I=\sum_{i=1}^n|v_i\rangle\langle v_i|\). That is, show for any \(\vec v\), \(\left(\sum_i|v_i\rangle\langle v_i|\right)|v\rangle=|v\rangle\).

Given a matrix with an SVD \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), let \(\left\{A_i\right\}\) be the matrices given by Algorithm [Algorithm1]. Lemma 2.3 implies that \(A_{i-1}|v_i\rangle=\lambda_i|u_i\rangle\). Show that \(A|v_i\rangle=\lambda_i|u_i\rangle\).

Given a matrix with a SVD \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), show that \(A=\sum_iA|v_i\rangle\langle v_i|\). (For fun, prove this two different ways.)

An annoying fact about the SVD is that it’s not unique. But it is almost unique. In this section, we see exactly how limited the variation is.

Let’s review briefly. Given a matrix \(A\), we can find a SVD, \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\). The \(\lambda_i\) do not need to be distinct. For the sake of this section, we write this as

\[\label{MultiLambdaNotation} A=\sum_i\lambda_i\sum_{j=1}^{n_i}|u_{ij}\rangle\langle v_{ij}|,\]

where \(\lambda_1>\lambda_2>\cdots\). By indexing the vectors/covectors with a second index \(j\), we can make sure that all our \(\lambda_i\) are unique.

Example

Suppose we had an SVD: \[A=2|u_1\rangle\langle v_1|+2|u_2\rangle\langle v_2|+2|u_3\rangle\langle v_3|+|u_4\rangle\langle v_4|+|u_5\rangle\langle v_5|\]

To rewrite this in the language of Equation ([MultiLambdaNotation]), we would write: \[A=2\cdot\left(|u_{11}\rangle\langle v_{11}|+|u_{12}\rangle\langle v_{12}|+|u_{13}\rangle\langle v_{13}|\right)+1\cdot\left(|u_{21}\rangle\langle v_{21}|+|u_{22}\rangle\langle v_{22}|\right)\]

So that \(\lambda_1=2\) and \(\lambda_2=1\). \(|u_1\rangle\) gets renamed to \(|u_{11}\rangle\), \(\langle v_4|\) gets remapped to \(\langle v_{21}|\), etc.

If the SVD was built with our algorithm from the previous section, then \(\langle u_{11}|A|v_{11}\rangle=\lambda_1\) maximizes \(\langle u|A|v\rangle\). Of course because \(\langle u_{1j}|A|v_{1j}\rangle=\lambda_1\) for any \(1\leq j\leq n_1\), the algorithm may just as well have chosen \(v_{12}\) or \(v_{13}\) first, so any reordering of these could have been produced. In fact we don’t need to be restricted to these exact vectors; for any unit vector \(|v\rangle\in\operatorname{span}\left\{|v_{1*}\rangle\right\}\), we can find a \(\langle u|\) (given by Lemma 2.3) for which \(\langle u|A|v\rangle=\lambda_1\). It also turns out that for any unit vector \(|v\rangle\not\in\operatorname{span}\left\{|v_{1*}\rangle\right\}\), there is no \(\langle u|\) for which \(\langle u|A|v\rangle=\lambda_1\). These two statements are proven in Corollary 2.11.

Together these show that if you follow the algorithm from the previous section of repeatedly finding the maximizing vectors, the only variation you can get is a change of basis in \(\operatorname{span}\left\{|v_{1*}\rangle\right\}\). (If \(n_1=1\), then this means that we can only vary \(\lambda_1|u\rangle\langle v|=\lambda_1|-u\rangle\langle -v|\).) Actually we’ve only shown this for the largest \(\lambda=\lambda_1\), but by subtracting off these components, we can apply the same argument to the resulting difference with its new largest \(\lambda=\lambda_2\); this is a trick we’ll use repeatedly.

So running the algorithm won’t give you the exact same answer each time you do it. It may vary, but only in choosing a different basis for each \(\operatorname{span}\left\{|v_{i*}\rangle\right\}\). But is it possible to use some other algorithm to come up with an SVD? And maybe if you did, you’d get a much different SVD? We show in this section that no matter how you come up with an SVD, it must be the same as one of the SVDs created by the algorithm. That is, it can only vary by a change of basis for each spanning space \(\operatorname{span}\left\{\langle u_{i*}|\right\}\) and \(\operatorname{span}\left\{|v_{i*}\rangle\right\}\).

Theorem 2.10. Given an SVD of a matrix, \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), with largest singular value \(\lambda_1\), then for any unit vectors \(\vec u\), \(\vec v\), \(\langle u|A|v\rangle\leq\lambda_1\).

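Proof. By Lemma 2.3, the maximizing \(\langle u|\) for a given \(|v\rangle\) is \(\frac{\langle v|A^T}{\lambda}\) with \(\lambda=\left\|A|v\rangle\right\|\), so we get that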

\[\max_{\|u\|=\|v\|=1}\langle u|A|v\rangle = \max_{\|v\|=1}\frac{\langle v|A^T}{\lambda}A|v\rangle = \max_{\|v\|=1} \frac{\langle v|A^TA|v\rangle}{\left\|A|v\rangle\right\|}\]

For any vector \(\vec w\), \(\left\|\vec w\right\|=\sqrt{\vec w^T\vec w}\). So,

\[\max_{\|v\|=1} \frac{\langle v|A^TA|v\rangle}{\left\|A|v\rangle\right\|} = \max_{\|v\|=1} \frac{\langle v|A^TA|v\rangle}{\sqrt{\langle v|A^TA|v\rangle}} = \max_{\|v\|=1} \sqrt{\langle v|A^TA|v\rangle}\]

A common trick is to notice that the square root of a value is maximal exactly when the value itself is maximal. So that the same \(|v\rangle\) that maximizes \(\langle u|A|v\rangle\) also maximizes \(\langle v|A^TA|v\rangle\).

So to maximize \(\langle v|A^TA|v\rangle\), we use the fact that \(A^TA=\sum_i\lambda_i^2|v_i\rangle\langle v_i|\) (Lemma 2.2). If \(\left\{\vec v_i\right\}\) do not span the domain (with dimension \(=n\)), then we pad the set with additional orthonormal vectors, each with \(\lambda_i=0\), so that \(A^TA=\sum_{i=1}^n\lambda_i^2|v_i\rangle\langle v_i|\). Now \(\left\{\vec v_i\right\}_{i=1}^n\) is an orthonormal basis, so that any unit vector can be written as \(|v\rangle = \sum_{i=1}^na_i|v_i\rangle\), with \(\sum_{i=1}^na_i^2 =1\).

\[\label{UpperboundEquation} \begin{array}{rcl} \langle v|A^TA|v\rangle &=& \langle v|\left(\sum_i\lambda_i^2|v_i\rangle\langle v_i|\right)|v\rangle \\ &=& \left(\sum_i a_i\langle v_i|\right)\left(\sum_i\lambda_i^2|v_i\rangle\langle v_i|\right)\left(\sum_i a_i|v_i\rangle\right) \\ &=& \sum_ia_i^2\lambda_i^2 \leq \sum_ia_i^2\lambda_1^2 = \lambda_1^2 \end{array}\]

The inequality on the last line follows because \(\lambda_1\) is at least as large as every other \(\lambda_i\).

Putting it all together, we have:

\[\langle u|A|v\rangle\leq\max_{\|u\|=\|v\|=1}\langle u|A|v\rangle=\max_{\|v\|=1}\sqrt{\langle v|A^TA|v\rangle}\leq\sqrt{\lambda_1^2}=\lambda_1\] ◻

Notice that by eliminating the \(\langle u|\), the problem of maximizing became easier. After finding \(|v\rangle\), we could use Lemma 2.3 to find \(\langle u|\). But by symmetry, we could have instead found the \(\langle u|\) that maximizes \(\langle u|AA^T|u\rangle\).
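The bound in Theorem 2.10 can be spot-checked numerically. The following sketch is an illustration under assumptions: the example matrix is made up for the demo, its largest singular value \(\sqrt{45}\) is taken from the eigenvalues of \(A^TA\) (which for this matrix are \(45\) and \(5\)), and the grid size `N` is arbitrary.

```python
import math

A = [[3.0, 0.0], [4.0, 5.0]]
lam1 = math.sqrt(45.0)   # A^T A = [[25, 20], [20, 25]] has eigenvalues 45 and 5

def pairing(a, b):
    """<u|A|v> for the unit vectors at angles a and b (in radians)."""
    u = (math.cos(a), math.sin(a))
    v = (math.cos(b), math.sin(b))
    Av = (A[0][0] * v[0] + A[0][1] * v[1], A[1][0] * v[0] + A[1][1] * v[1])
    return u[0] * Av[0] + u[1] * Av[1]

# Evaluate <u|A|v> over a grid of unit-vector pairs and keep the largest value.
N = 200
step = 2.0 * math.pi / N
largest = max(pairing(i * step, j * step) for i in range(N) for j in range(N))
print(largest, lam1)
```

No pair on the grid exceeds `lam1`, and the best pair comes close to it, which is what the theorem and the remark about achievability lead us to expect.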

While the theorem gives an upper bound of \(\lambda_1\), note that this bound is achievable, namely with \(\vec u_1\) and \(\vec v_1\). But we can be a little more specific:

Corollary 2.11. Given a matrix with SVD, \(A=\sum_i\lambda_i\sum_{j=1}^{n_i}|u_{ij}\rangle\langle v_{ij}|\) (with \(\lambda_1>\lambda_2>\cdots\)) and a unit vector \(|v\rangle\), \(\max_{\|u\|=1}\langle u|A|v\rangle=\lambda_1\) if and only if \(|v\rangle\in\operatorname{span}\left\{|v_{1*}\rangle\right\}\).

We skip some of the details of this proof. It amounts to observing that the inequality at the end of Eq. ([UpperboundEquation]) is strict unless all the non-zero terms have \(\lambda_i\) coefficients equal to \(\lambda_1\).

Proof. Redo the work of Lemma 2.2, but this time with the SVD written as \(A=\sum_i\lambda_i\sum_{j=1}^{n_i}|u_{ij}\rangle\langle v_{ij}|\) to get

\[A^TA=\sum_i\lambda_i^2\sum_{j=1}^{n_i}|v_{ij}\rangle\langle v_{ij}|\]

Write \(|v\rangle=\sum a_{ij}|v_{ij}\rangle\). Because \(|v\rangle\) is a unit vector, \(\sum a_{ij}^2=1\). By the same logic as Eq. ([UpperboundEquation]), we get that

\[\langle v|A^TA|v\rangle = \sum_ia_i^2\lambda_i^2\]

where \(a_i^2=\sum_ja_{ij}^2\). Again we have that the coefficients must satisfy \(\sum_ia_i^2=1\), but this time the \(\lambda_i\) are distinct, so it’s clear that this equals \(\lambda_1^2\) if and only if \(a_1^2=1\) and all other \(a_i\) are \(0\). Since \(\max_{\|u\|=1}\langle u|A|v\rangle=\sqrt{\langle v|A^TA|v\rangle}\), the maximum equals \(\lambda_1\) in exactly this case. But this happens if and only if \(|v\rangle\in\operatorname{span}\left\{|v_{1*}\rangle\right\}\). ◻

Some words should be said about Theorem 2.10 and Corollary 2.11. We’ll see in a bit that they are used to prove the almost-uniqueness of the SVD. In many ways, this theorem is the keystone of the entire chapter; it connects all the concepts. We needed to first prove the existence of an SVD to prove it. But we now see a motivation for Algorithm [Algorithm1]. Until now, the singular values seemed like an artifact of the specific SVD representation. But now we see that the first singular value \(\lambda_1\) is something fundamental about the matrix: it’s an upper bound that doesn’t depend on the SVD representation. (By repeated subtraction, all singular values are representation-independent.) So it makes sense that we should try to find and use these singular values. Looking ahead, the next chapter shows how to calculate the vectors \(|v_i\rangle\). That calculation relies entirely on the trick (first encountered in Theorem 2.10) of canceling the \(\langle u_i|\) by taking \(A^TA\).

Theorem 2.10 and its corollary are also perhaps the most surprising. It seems sort of like magic. Maybe you were along for the ride when I said that we’ll take \(A^TA\) to cancel out the \(|u_i\rangle\), but it seems like luck when you end up with \(\sum_ia_i^2\), which just happened to be \(1\), by nature of looking at unit vectors. Maybe there’s some magic here. But at the same time, matrices are sometimes thought of as quadratic operators. For a vector \(\vec x=\left(\begin{array}{c}x_1\\x_2\\\vdots\\x_n\end{array}\right)\) and an \(n\times n\) matrix \(M\), \(\vec x^TM\vec x\) has every power-\(2\) term of \(x\) with coefficients derived from \(M\). (Check this.) Given this quadratic nature, maybe it’s less surprising that it’d take vector components to their magnitude.

Imagine that your matrix \(A\) is a diagonal matrix. This is already an SVD with \(D=A\) and \(U=V=I\). Look at how obvious the logic here is now. With some \(\vec x=\left(\begin{array}{c}x_1\\x_2\\\vdots\\x_n\end{array}\right)\), \(\langle x|D|x\rangle=\sum_i\lambda_ix_i^2\). If you wanted the first singular value, \(\lambda_1\), the largest value, then you need to do something to get \(A_{11}\). Of course \(A_{11}=\langle e_1|A|e_1\rangle\) would do the trick. When \(U\) and \(V\) aren’t the identity then you should do \(\langle e_1|U^T\) and \(V|e_1\rangle\) to cancel. The existence of the SVD just says that aside from these little twists, \(U\) and \(V^T\), all matrices are just these canonical quadratic forms.

With that digression behind us, we can now actually prove almost-uniqueness. All the hard work has been done already. This theorem brings together lots of ideas, so take your time with it.

Theorem 2.12. Given two SVDs of a matrix \(A=\sum_i\lambda_i\sum_{j=1}^{n_i}|u_{ij}\rangle\langle v_{ij}|=\sum_i\lambda_i'\sum_{j=1}^{n_i'}|u_{ij}'\rangle\langle v_{ij}'|\), then for each \(i\):

(1) \(\lambda_i=\lambda_i'\)

(2) \(n_i=n_i'\)

(3) \(\operatorname{span}\left\{\vec u_{i*}\right\}=\operatorname{span}\left\{\vec u_{i*}'\right\}\)

(4) \(\operatorname{span}\left\{\vec v_{i*}\right\}=\operatorname{span}\left\{\vec v_{i*}'\right\}\)

Proof. We first prove the four statements for \(i=1\).

Theorem 2.10 tells us that the largest value of \(\langle u|A|v\rangle\) is the largest singular value for any SVD. Because these SVDs represent the same matrix, \(A\), it follows that these two singular values are equal, \(\lambda_1=\lambda_1'\). So (1) is true.

Because \(\langle u_{1j}|A|v_{1j}\rangle=\lambda_1=\lambda_1'\) for each \(j\), Corollary 2.11 tells us that \(\langle u_{1j}|\in\operatorname{span}\left\{\langle u_{1*}'|\right\}\) and \(|v_{1j}\rangle\in\operatorname{span}\left\{|v_{1*}'\rangle\right\}\). Since spans are closed under linear combinations, it follows also that \(\operatorname{span}\left\{\langle u_{1*}|\right\}\subset\operatorname{span}\left\{\langle u_{1*}'|\right\}\) and \(\operatorname{span}\left\{|v_{1*}\rangle\right\}\subset\operatorname{span}\left\{|v_{1*}'\rangle\right\}\). Applying the same argument in the other direction, we get the reverse inclusions, so that by double inclusion, we can conclude that \(\operatorname{span}\left\{\langle u_{1*}|\right\}=\operatorname{span}\left\{\langle u_{1*}'|\right\}\) and \(\operatorname{span}\left\{|v_{1*}\rangle\right\}=\operatorname{span}\left\{|v_{1*}'\rangle\right\}\). This proves (3) and (4).

Because \(\left\{|v_{1*}\rangle\right\}\) are orthonormal, the dimension of their span is the number of elements in the set, \(n_1\). The span of \(\left\{|v_{1*}'\rangle\right\}\) is the same space, so the same dimension. But this time the dimension is the number of elements in this orthonormal set, \(n_1'\). It follows that \(n_1=n_1'\), which proves (2).

We’ve only proven this for the first singular value. This is because Theorem 2.10 and Corollary 2.11 are only proven for the maximum singular value. We want to use the usual trick of subtracting that signal out and repeating the argument on the resulting matrix. Usually we gloss over this detail, but here it requires a little more care. We subtract \(\lambda_1\sum_{j=1}^{n_1}|u_{1j}\rangle\langle v_{1j}|\) from \(A\). On the representation \(\sum_{i=1}^n\lambda_i\sum_{j=1}^{n_i}|u_{ij}\rangle\langle v_{ij}|\), this just removes the first summand.

\[A-\lambda_1\sum_{j=1}^{n_1}|u_{1j}\rangle\langle v_{1j}|=\sum_{i=2}^n\lambda_i\sum_{j=1}^{n_i}|u_{ij}\rangle\langle v_{ij}|\]

However for the other representation, we have something messier.

\[A-\lambda_1\sum_{j=1}^{n_1}|u_{1j}\rangle\langle v_{1j}|=\lambda_1\left(\sum_{j=1}^{n_1}|u_{1j}'\rangle\langle v_{1j}'|-\sum_{j=1}^{n_1}|u_{1j}\rangle\langle v_{1j}|\right)+\sum_{i=2}^n\lambda_i'\sum_{j=1}^{n_i'}|u_{ij}'\rangle\langle v_{ij}'|\]

In order to repeat the above argument, we need to reduce this to an SVD again. We use Lemma 2.9 with \(B=\frac{1}{\lambda_1}A\) to conclude that \(\sum_{j=1}^{n_1}|u_{1j}'\rangle\langle v_{1j}'|=\sum_{j=1}^{n_1}|u_{1j}\rangle\langle v_{1j}|\), which cancels out the \(\lambda_1\) terms from the above, resulting in:

\[A-\lambda_1\sum_{j=1}^{n_1}|u_{1j}\rangle\langle v_{1j}|=\sum_{i=2}^n\lambda_i'\sum_{j=1}^{n_i'}|u_{ij}'\rangle\langle v_{ij}'|\]

Now we have two SVDs of \(A-\lambda_1\sum_{j=1}^{n_1}|u_{1j}\rangle\langle v_{1j}|\), namely \(\sum_{i=2}^n\lambda_i'\sum_{j=1}^{n_i'}|u_{ij}'\rangle\langle v_{ij}'|\) and \(\sum_{i=2}^n\lambda_i\sum_{j=1}^{n_i}|u_{ij}\rangle\langle v_{ij}|\). So we can repeat the above arguments on this matrix. Subtract, and repeat again, and so on. ◻

What this tells you is that no matter how you obtain the SVD, it will be in basically the same form. It will have the same singular values with the same multiplicity, and the only difference is that there may be a different basis chosen for the vectors.
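A tiny example makes the "different basis, same matrix" point concrete. The sketch below is illustrative only: it takes the matrix with a single singular value \(2\) of multiplicity two (that is, \(2I\)) and builds it from two different orthonormal bases. The rotation angle `t` and the helper name `weighted_outer_sum` are assumptions made for the demo.

```python
import math

def weighted_outer_sum(lam, pairs):
    """Return lam * sum_j |u_j><v_j| as a 2x2 list of lists."""
    return [[lam * sum(u[i] * v[j] for u, v in pairs) for j in range(2)]
            for i in range(2)]

t = 0.7                                  # any rotation angle would do
c, s = math.cos(t), math.sin(t)

# Two orthonormal bases of the plane: the standard one and a rotated one.
standard = [((1.0, 0.0), (1.0, 0.0)), ((0.0, 1.0), (0.0, 1.0))]
rotated = [((c, s), (c, s)), ((-s, c), (-s, c))]

A_standard = weighted_outer_sum(2.0, standard)
A_rotated = weighted_outer_sum(2.0, rotated)
print(A_standard)
print(A_rotated)
```

Both sums give the same matrix up to rounding, so both are SVDs of it: same singular value, same multiplicity, different basis for the same span.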

This is a lot of work to show almost-uniqueness, but by now we should start to have some intuition for what the SVD is. Firstly, the singular values are something fundamental about the matrix, and not something that depends on the SVD representation. Secondly, our algorithm from the previous section is a rational thing to try. The largest singular value defines a space where these values can occur, but then after you subtract out that dimension, you get a big jump down to the next singular value.

Exercises

With an SVD given by Eq. ([MultiLambdaNotation]), show that if \(|v\rangle\in\operatorname{span}\{|v_{i*}\rangle\}\), then \(A|v\rangle\in\operatorname{span}\{|u_{i*}\rangle\}\).

Given a matrix with SVD \(A=\sum_i\lambda_i|v_i\rangle\langle v_i|\), show that for any \(n\in\mathbb N\), \(A^n=\sum_i\lambda_i^n|v_i\rangle\langle v_i|\).

Given a matrix with a SVD \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), show that \(A=\left(\sum_i|u_i\rangle\langle u_i|\right)A\left(\sum_j|v_j\rangle\langle v_j|\right)\) by distributing the sums and arguing that \(\langle u_i|A|v_j\rangle\) equals \(\lambda_i\) if \(i=j\) and \(0\) otherwise. How could you show this equality without distributing?

Given an invertible square matrix \(A\) with minimum singular value \(\lambda_n\), prove that for any unit vector \(\vec v\), \(\max_{\|u\|=1}\langle u|A|v\rangle\geq\lambda_n\).

Given a matrix \(A\), prove that for any value \(t\) between the smallest and largest singular value \(\lambda_n\leq t\leq\lambda_1\), there are some unit vectors \(\langle u|\) and \(|v\rangle\) for which \(\langle u|A|v\rangle=t\).

So far we haven’t said how to actually calculate an SVD. Algorithm [Algorithm1] says to repeatedly find maximal vectors, but we haven’t said how to do this. In this section we show that this calculation amounts to calculating certain eigenvalues and eigenvectors. In practice, you could calculate the SVD using a computer program. (See Section 3.1.) Yet knowing how to calculate manually will advance our understanding of the SVD.

Before going on, we need a small fact about calculus. I’ll state a lemma here without proof. Calculus is outside the scope of this book, but I provide some discussion in Appendix X.

Lemma 2.13. For any symmetric square matrix \(B\),

\[\frac{\partial}{\partial|v\rangle}\langle v|B|v\rangle = 2B|v\rangle\]

The left-hand side of this equation is a vector whose entries equal \(\frac{\partial\langle v|B|v\rangle}{\partial v_i}\). This lemma seems simple. (It’s analogous to \(\frac{d}{dx}\left(bx^2\right)=2bx\).) Indeed, it could be demonstrated with basic calculus, but the calculation is slightly more difficult than you may guess.
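As a sanity check on the lemma, here is the \(2\times2\) case worked out by hand; this is a sketch for illustration rather than a proof. Write \(|v\rangle=\left(\begin{array}{c}v_1\\v_2\end{array}\right)\) and \(B=\left(\begin{array}{cc}B_{11}&B_{12}\\B_{21}&B_{22}\end{array}\right)\). Then

\[\begin{array}{rcl} \langle v|B|v\rangle &=& B_{11}v_1^2+\left(B_{12}+B_{21}\right)v_1v_2+B_{22}v_2^2 \\ \frac{\partial}{\partial v_1}\langle v|B|v\rangle &=& 2B_{11}v_1+\left(B_{12}+B_{21}\right)v_2 \\ \frac{\partial}{\partial v_2}\langle v|B|v\rangle &=& \left(B_{12}+B_{21}\right)v_1+2B_{22}v_2 \end{array}\]

Stacking the two partial derivatives gives \(\left(B+B^T\right)|v\rangle\), which equals \(2B|v\rangle\) when \(B\) is symmetric (\(B_{12}=B_{21}\)). That is the case we need, because the lemma is applied to \(A^TA\), which is always symmetric.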

We saw in the last section that we could find \(|v_1\rangle\) in \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\) as the unit vector \(|v\rangle\) that maximizes \(\langle v|A^TA|v\rangle\). To calculate this max, we can do some calculus, but we have to be careful to restrict to unit vectors (\(\langle v|v\rangle=1\)). To enforce this, we use Lagrange multipliers. (We call the Lagrange multiplier \(\varepsilon\) instead of the usual \(\lambda\) to avoid confusion with singular values.)

\[\langle v|A^TA|v\rangle-\varepsilon\langle v|v\rangle=\langle v|\left(A^TA-\varepsilon I\right)|v\rangle\]

By taking the derivative using Lemma 2.13 and setting to zero, we get

\[\left(A^TA-\varepsilon I\right)|v_1\rangle=0\]

That is, \(|v_1\rangle\) is an eigenvector of \(A^TA\). Though this doesn’t tell us which eigenvector, we can evaluate \(\langle v|A^TA|v\rangle\) at each eigenvector and keep the one that gives the largest value. Similarly \(|u_1\rangle\) is an eigenvector of \(AA^T\).

If the eigenvalue corresponding to \(|v_1\rangle\) is \(\varepsilon_1\), then \(\langle v_1|A^TA|v_1\rangle=\varepsilon_1\). From the discussion in the proof of Theorem 2.10,

\[\lambda_1=\langle u_1|A|v_1\rangle=\sqrt{\langle v_1|A^TA|v_1\rangle}=\sqrt{\varepsilon_1}\]

It’s not surprising that these vectors are eigenvectors of \(A^TA\), because recall that

\[A^TA=\sum_i\lambda_i^2|v_i\rangle\langle v_i|.\]

Because the \(|v_i\rangle\) are orthonormal, it follows that \(A^TA|v_i\rangle=\lambda_i^2|v_i\rangle\), which makes \(|v_i\rangle\) an eigenvector.

Yet another way to see this is to write \(A=UDV^T\), so that \(A^TA=VD^2V^T\). You can read this as a rotation (\(V^T\)), a stretch or squeeze along the axes (\(D^2\)), then a rotation back (\(V\)). \(V^T\) maps each of the vectors \(\vec v_i\) to an axis, and \(V\) maps the axes back to the vectors \(\vec v_i\). It’s clear then that \(VD^2V^T\) maps each \(\vec v_i\) to \(\varepsilon_i\vec v_i\), where \(\varepsilon_i=\lambda_i^2\).
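The eigenvector route can be sketched in a few lines of Python. This is only an illustration on a made-up \(2\times 2\) matrix: it takes the eigenvectors of \(A^TA\) as the \(|v_i\rangle\), the square roots of the eigenvalues as the \(\lambda_i\), and recovers each \(|u_i\rangle\) from \(A|v_i\rangle=\lambda_i|u_i\rangle\). Dividing by \(\lambda_i\) assumes every singular value is non-zero.

```python
import numpy as np

A = np.array([[3.0, 0.0],
              [4.0, 5.0]])   # example matrix chosen for illustration

# Eigen-decomposition of the symmetric matrix A^T A.
# eigh returns eigenvalues in ascending order, so reorder to put the largest first.
eigvals, eigvecs = np.linalg.eigh(A.T @ A)
order = np.argsort(eigvals)[::-1]
eigvals, V = eigvals[order], eigvecs[:, order]

# Singular values are the square roots of the eigenvalues of A^T A.
singular_values = np.sqrt(eigvals)

# Column i of U is A|v_i> / lambda_i (valid because every lambda_i is non-zero here).
U = (A @ V) / singular_values

# Rebuild A as sum_i lambda_i |u_i><v_i|.
A_rebuilt = sum(s * np.outer(U[:, i], V[:, i])
                for i, s in enumerate(singular_values))

print(singular_values)
print(np.allclose(A_rebuilt, A))
```

Computing the \(|u_i\rangle\) from \(A|v_i\rangle\), rather than separately from the eigenvectors of \(AA^T\), keeps the signs of each \(|u_i\rangle\), \(|v_i\rangle\) pair consistent.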

Exercises

Using the eigenvector technique presented in this section, calculate the SVD of \(\left(\begin{array}{cc}2&6\\1&1\end{array}\right)\).

Prove that the eigenvalues of \(A^TA\) form the same multiset as the eigenvalues of \(AA^T\).

Given a matrix \(A\), let \(\left\{A_i\right\}\) be the matrices found in Algorithm [Algorithm1]. Show that for any \(j>i\) an eigenvector corresponding to a non-zero eigenvalue of \(A_j\) is also an eigenvector of \(A_i\).

Given a symmetric matrix \(A\) with distinct singular values, prove that every SVD has the form \(\sum_i\lambda_i|v_i\rangle\langle v_i|\).

Though it took a lot of work to build some understanding of the SVD, we will use it throughout the book. In the next chapter, we discuss a number of applications of the SVD. For now, I will whet your appetite with some immediate conclusions.

Theorem 2.14. If \(A\) is an \(m\times n\)-dimensional matrix, then \(\operatorname{Dim}\left(\operatorname{Range}(A)\right)+\operatorname{Dim}\left(\operatorname{NullSpace}(A)\right)=n\).

Proof. Choose some SVD \(A=\sum_{i=1}^r\lambda_i|u_i\rangle\langle v_i|\) with every \(\lambda_i\) non-zero. Extend \(\left\{|v_i\rangle\right\}_{i=1}^r\) to an orthonormal basis of the domain by adding vectors \(|v_{r+1}\rangle, |v_{r+2}\rangle, ..., |v_n\rangle\). Then \(\operatorname{Range}(A)=\operatorname{Span}\left\{|u_i\rangle\right\}_{i=1}^r\), which has dimension \(r\), and \(\operatorname{NullSpace}(A)=\operatorname{Span}\left\{|v_i\rangle\right\}_{i=r+1}^n\), which has dimension \(n-r\). (See Exercises [Ex-SVD Null Space] and [Ex-SVD Range].) So the dimensions sum to \(r+(n-r)=n\). ◻
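The bookkeeping in this proof can be mirrored numerically. The sketch below uses a made-up \(3\times 4\) matrix and a hypothetical tolerance `tol` for deciding which singular values count as zero. It reads a basis for the range off the first \(r\) columns of `U` and a basis for the null space off the remaining rows of `VT`.

```python
import numpy as np

A = np.array([[1.0, 2.0, 0.0, 1.0],
              [2.0, 4.0, 1.0, 0.0],
              [3.0, 6.0, 1.0, 1.0]])   # 3x4 example; row 3 = row 1 + row 2
m, n = A.shape

U, d, VT = np.linalg.svd(A)            # VT is n x n, so its rows form a full basis
tol = 1e-10                            # assumed cutoff for "numerically zero"
r = int(np.sum(d > tol))               # number of non-zero singular values

range_basis = U[:, :r]                 # |u_1>, ..., |u_r>
null_basis = VT[r:].T                  # |v_{r+1}>, ..., |v_n>

# A sends every null-space basis vector to (numerically) zero.
print(np.allclose(A @ null_basis, 0))
print(range_basis.shape[1] + null_basis.shape[1] == n)
```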

Theorem 2.15. Let \(A\) be an \(m\times m\)-dimensional matrix with \(m\) (not-necessarily-distinct) singular values, \(\lambda_1, ..., \lambda_m\). Then \(|A|=\pm\prod_{i=1}^m\lambda_i\).

Proof. Choose an SVD, \(A=UDV^T\). Because the determinant is multiplicative, \(|A|=|U|\cdot|D|\cdot|V^T|\). Because \(U\) and \(V^T\) are orthogonal, \(|U|\) and \(|V^T|\) each equal \(\pm1\). So \(|A|=\pm|D|\). \(D\) is a diagonal matrix with entries \(\lambda_1, ..., \lambda_m\), and the determinant of a diagonal matrix is the product of its diagonal entries. ◻

There’s another way to understand this. The orthogonal matrices \(U\) and \(V^T\) are isometries. This means that they preserve distances and, importantly, volumes. The determinant can be understood as the (signed) volume of the image of a unit cube. With a \(UDV^T\) transformation, the cube gets rotated (\(V^T\)), stretched or squashed (\(D\)) into a shape of volume \(|D|\), then rotated again (\(U\)). The rotations don’t affect the volume, so the unit cube gets mapped to some shape of volume \(|D|\).
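A quick numerical check of the volume picture, on a made-up \(3\times 3\) matrix: the product of the singular values should match the determinant up to sign. Swapping two rows flips the sign of the determinant but leaves the singular values alone, which is why the comparison uses the absolute value.

```python
import numpy as np

A = np.array([[2.0, 1.0, 0.0],
              [0.0, 1.0, 3.0],
              [1.0, 0.0, 1.0]])   # example matrix chosen for illustration
B = A[[1, 0, 2]]                  # same rows with the first two swapped

singular_values_A = np.linalg.svd(A, compute_uv=False)
singular_values_B = np.linalg.svd(B, compute_uv=False)
det_A = np.linalg.det(A)
det_B = np.linalg.det(B)

# Volume scaling agrees with |det| for both matrices.
print(np.isclose(abs(det_A), np.prod(singular_values_A)))
print(np.isclose(abs(det_B), np.prod(singular_values_B)))
```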

Theorem 2.16. Given a matrix with SVD, \(X=\sum_i\lambda_i|u_i\rangle\langle v_i|\), the span of the columns of \(X\) is the span of \(\left\{|u_i\rangle\right\}_i\), and the span of the rows of \(X\) is the span of \(\left\{|v_i\rangle\right\}_i\).

Proof. TODO ◻

Exercises

In some contexts, a matrix norm is defined as \[\|A\|=\max_{|v\rangle\neq0}\frac{\|A|v\rangle\|}{\||v\rangle\|}.\] Given an SVD, \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), show that \(\|A\|=\lambda_1\).

Call \(\sigma_1(A)\) the largest singular value of \(A\). Prove that \(\sigma_1(A+B)\leq\sigma_1(A)+\sigma_1(B)\).

Let \(\mathcal F\) be the vector space spanned by \[\left\{|\cos(x)\rangle, |\cos(2x)\rangle, |\cos(3x)\rangle, |\sin(x)\rangle, |\sin(2x)\rangle, |\sin(3x)\rangle\right\}.\] Show that the derivative \(D\) is linear on this space. Define the inner product on this space as \(\langle u(x)|v(x)\rangle=\frac{1}{\pi}\int_{x=-\pi}^\pi u(x)v(x)\operatorname{d}x\). Show that \(|D|=36\).

For the applications we discuss, the work will be done with computers. Though we won’t focus on programming in this book, there will be exercises with computer work.

If you have a language of choice, feel free to use that. If not, I recommend Python. For a quick start, I recommend using Google Colab to make small Python notebooks.

To do an SVD in Python is easy:

import numpy as np

A = ... # Make matrix as a numpy array or a list of lists

U, d, VT = np.linalg.svd(A)

D = np.diag(d)

Some exercises will involve a deeper dive on the programming side. For these problems, you may need to look up and learn about libraries not covered in this book. I label these problems with a \(\diamondsuit\).
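One practical detail about the snippet above: `np.diag(d)` is square, so `U @ D @ VT` only has matching shapes when \(A\) is square. For a rectangular matrix, one option (a sketch, using a made-up \(2\times 3\) matrix) is to ask NumPy for the thin factors with `full_matrices=False`.

```python
import numpy as np

A = np.array([[1.0, 0.0, 2.0],
              [0.0, 3.0, 1.0]])        # 2x3 example chosen for illustration

# With full_matrices=False, U is 2x2, d has 2 entries, and VT is 2x3,
# so the three factors can be multiplied directly.
U, d, VT = np.linalg.svd(A, full_matrices=False)
D = np.diag(d)
A_rebuilt = U @ D @ VT

print(np.allclose(A_rebuilt, A))
```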

Exercises

For these exercises, we use

\[A = \left(\begin{array}{ccc} 1 & 2 & 3 \\ 0 & 3 & 5 \\ 3 & 6 & 9 \end{array}\right)\]

Use the computer to calculate the SVD of \(A\).

Use the computer to multiply \(U\), \(D\), and \(V^T\). Verify that it’s the same as \(A\).

Verify that \(U\) and \(V^T\) are orthogonal.

Write this in bra-ket notation.

[Ex-Irrational Summands] Use the computer to multiply out the summands in the bra-ket notation. Notice that their entries are not rational numbers, even though the entries of the sum are whole numbers.

Use the bra-ket notation to find the null space.

Famously Netflix created a prize several years ago, which paid anyone who could beat their recommendation algorithm by more than ten percent. The winner of the prize used collaborative filtering.

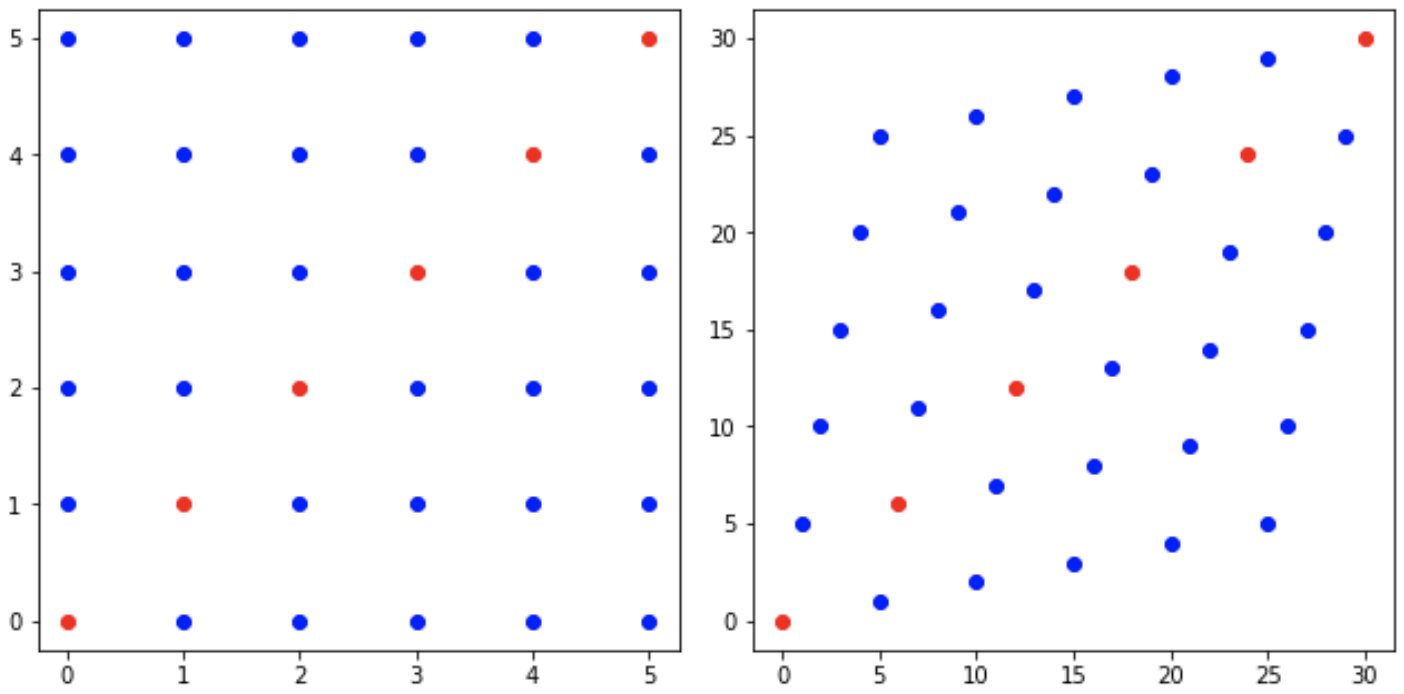

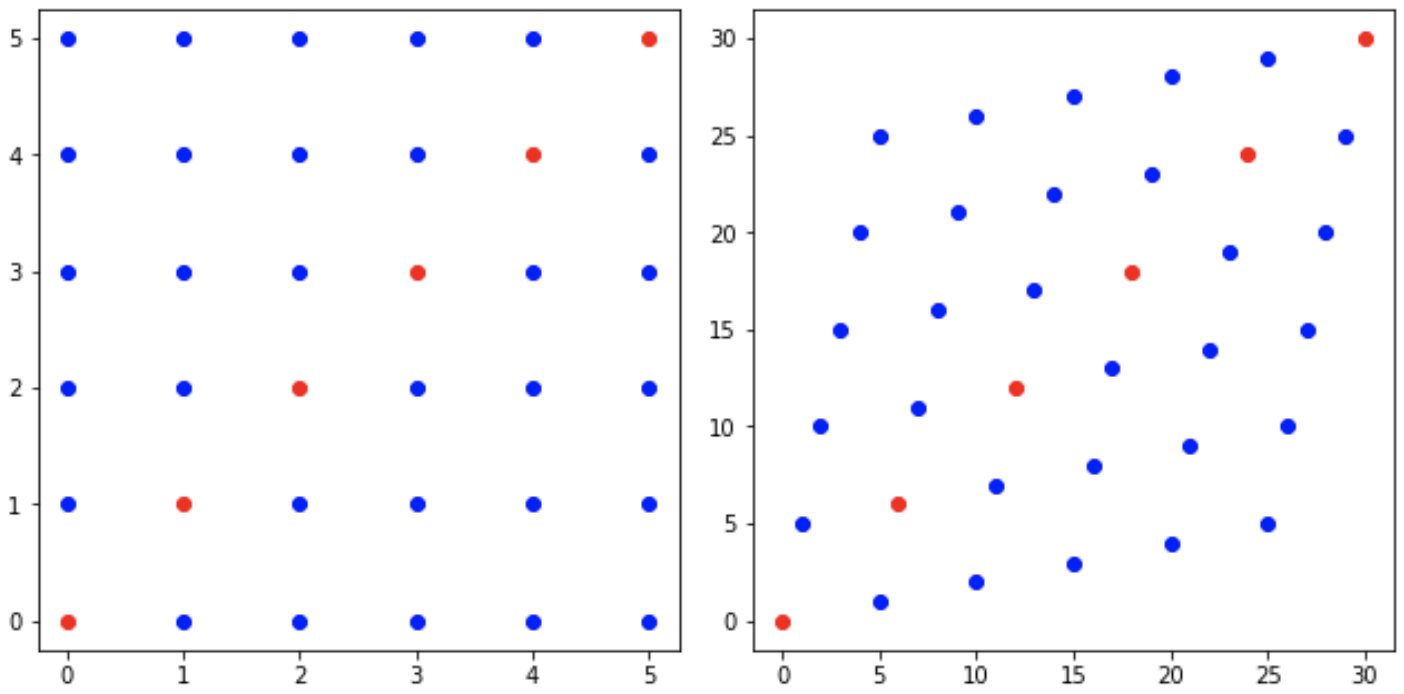

Netflix streams movies to a large number of users. Most of those streams originate from Netflix’s recommendations. One way to think of recommendation is as a map from users to movies. We might say that this is a linear map, so that this is just a giant matrix. But before we’re ready to talk about a recommendation matrix, let’s instead start with a "watch matrix" \(W\). \(W_{ij}=1\) if user \(j\) watched movie \(i\), and \(0\) otherwise.

This isn’t very useful yet, but let’s try to understand this better. When trying to understand a matrix, we could try taking the SVD.

\[W=\sum_i\lambda_i|m_i\rangle\langle u_i|\]

This decomposition can have thousands of summands, and not all of them are equally important. The vectors are always unit vectors, but the \(\lambda_i\) will typically vary quite a bit. Some will be very large, while others will be relatively small.

One observation is that if the SVD of a matrix \(A\) had a very tiny \(\lambda_n\) and you omitted that summand (call the result \(A'\)), you’d get a matrix that is very similar to \(A\). It is similar in the sense that for all unit vectors \(|v\rangle\), \(A|v\rangle\approx A'|v\rangle\).
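To make "very similar" concrete, here is a short worked bound, writing \(A'=A-\lambda_n|u_n\rangle\langle v_n|\) and using only that \(|u_n\rangle\) and \(|v_n\rangle\) are unit vectors:

\[\left\|A|v\rangle-A'|v\rangle\right\|=\left\|\lambda_n|u_n\rangle\langle v_n|v\rangle\right\|=\lambda_n\left|\langle v_n|v\rangle\right|\leq\lambda_n\left\||v\rangle\right\|\]

The last step is the Cauchy-Schwarz inequality. So dropping the summand moves the image of any vector by at most \(\lambda_n\) times that vector’s length.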

But who cares? Well, this gives a good way to smooth out a linear map. This is sometimes called a low-rank approximation. Similar to fitting a polynomial to a scatterplot, a low-rank approximation can help capture the signal through the noise. And experience has shown that this works quite well.

But why choose this particular low-rank matrix? Well, we’d want \(\left\|A|v\rangle-A'|v\rangle\right\|\) to be small as often as possible. Let’s again use the SVD \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), and for the sake of this example, look for a rank-\(1\) approximation. What’s the best rank-\(1\) matrix \(A'\) to approximate \(A\)? It turns out that \(\left\|A|v\rangle-A'|v\rangle\right\|\) is minimal for an "average" \(|v\rangle\) precisely when \(A'\) is the first summand of the SVD of \(A\).

Theorem 3.1. Given a matrix with SVD, \(A=\sum_i\lambda_i|u_i\rangle\langle v_i|\), then \[\min_{A'\text{ rank-$1$}}E_{|v\rangle}\left(\left\|A|v\rangle-A'|v\rangle\right\|\right)\] is achieved when \(A'=\lambda_1|u_1\rangle\langle v_1|\).

Proving Theorem 3.1 would need a better definition of "average" and would be too much of a diversion to prove here, but we do leave a simplified version as Exercise [Ex-CFMinimizes Simplified]. Applying this theorem repeatedly we see that the best low-rank approximation we can get for a matrix is to just truncate the SVD after a number of the largest summands. Very cool!
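Truncating the SVD takes only a few lines. The sketch below uses a hypothetical helper, `truncate_svd`, on synthetic data (a rank-2 signal plus small noise, invented for illustration). It checks one property that is easy to verify: the largest stretch of the difference \(A-A'\) equals the first omitted singular value.

```python
import numpy as np

def truncate_svd(A, k):
    """Return the sum of the k largest SVD summands of A."""
    U, d, VT = np.linalg.svd(A, full_matrices=False)
    return U[:, :k] @ np.diag(d[:k]) @ VT[:k]

rng = np.random.default_rng(0)

# A rank-2 "signal" plus small noise (synthetic data, purely for illustration).
signal = rng.normal(size=(20, 2)) @ rng.normal(size=(2, 10))
A = signal + 0.01 * rng.normal(size=(20, 10))

A2 = truncate_svd(A, 2)
d = np.linalg.svd(A, compute_uv=False)

# ord=2 gives the largest singular value of the difference matrix.
error = np.linalg.norm(A - A2, ord=2)

print(error, d[2])
```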

Let’s talk about Netflix again. \(W\) is a large \(m\times n\) matrix, where \(m\) is the number of movies and \(n\) is the number of users. The rank is probably close to \(m\), which is probably over ten thousand. But what if we approximated \(W\) with a rank-\(100\) matrix:

\[W'=\sum_{i=1}^{100}\lambda_i|m_i\rangle\langle u_i|\]

Maybe this captures signal through the noise, but what does it give us in practice? You’ll remember from Exercise [Ex-Irrational Summands] that the summands of the SVD usually aren’t rational, even if their sum is. \(W\) mapped users to movies, but it wasn’t very useful, because it only told us which movies a user had already watched. \(W'\) also maps users to movies, but it gives a real-valued score for every movie, which gives us a ranking of all movies for each user. Now we can tell not just what a user watched, but what they’re likely to watch. By recommending movies that the user hasn’t watched but that score high for them, we suggest the movies they’re most likely to want to watch.

This low-rank approximation is called collaborative filtering. The \(j\)-th column of the resulting matrix represents an interest profile for user \(j\), with one entry for every movie. This translates to recommendations by simply looking for the movies with the highest scores. There’s a lot of conversation in this section, but the algorithm is really nothing more than a truncated SVD.
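Here is a minimal sketch of that idea on an invented toy watch matrix. It assumes rows are movies and columns are users, matching \(W=\sum_i\lambda_i|m_i\rangle\langle u_i|\). The function name `recommend` and the data are hypothetical, and a real system would need far more care with scale, missing data, and evaluation.

```python
import numpy as np

# Toy watch matrix: rows are movies, columns are users (1 = watched).
# The data is invented for illustration.
W = np.array([
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 1],
], dtype=float)

def recommend(W, user, k):
    """Rank the movies `user` has not watched by their score in the rank-k truncation."""
    U, d, VT = np.linalg.svd(W, full_matrices=False)
    W_approx = U[:, :k] @ np.diag(d[:k]) @ VT[:k]
    scores = W_approx[:, user]                    # interest profile for this user
    unwatched = np.flatnonzero(W[:, user] == 0)   # only recommend unseen movies
    return unwatched[np.argsort(-scores[unwatched])]

top_pick = recommend(W, user=1, k=2)[0]
print(top_pick)
```

In this toy data, user 1 has watched the same movies as user 0 except for movie 2, so movie 2 comes out as the top pick for user 1.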

Some form of collaborative filtering won the Netflix prize. Though conceptually simple next to some of today’s complex models, it performed quite well. I should point out that it was trained not on \(W\) but on \(S\), where \(S_{ij}\) represents the star rating that user \(j\) gave to movie \(i\). (That is, users manually rated movies from 1 star to 5 stars.) If you were working at Netflix today and tried to build this, the choice between \(W\) and \(S\) would be a modeling decision you’d have to make.

As it happens, SVD is very good at getting meaningful signal. The summands of the SVD often end up being meaningful genres. You might get a dimension that represents action movies, which scores high for action movies and low for non-action movies. Or you might get a Kurt Russell dimension which is near \(1\) for all Kurt Russell movies and near \(0\) for all others. The values \(\langle m|m_i\rangle\) describe how well the movie \(\langle m|\) fits into the \(i\)-th genre, and the values \(\langle u_i|u\rangle\) describe how much the user \(|u\rangle\) is interested in the \(i\)-th genre.

Continuing our example, say that our low-rank matrix has two genres, action and Kurt Russell (\(KR\)), and say that both have \(\lambda=1\), so that

\[W'=|m_{action}\rangle\langle u_{action}|+|m_{KR}\rangle\langle u_{KR}|\]

Let’s say our movie library includes Overboard (\(\mathcal O\)), Escape From New York (\(\mathcal E\)), Frankenstein (\(\mathcal F\)), and Rambo (\(\mathcal R\)). (Sorry to anyone that hates movies.) Let’s also assume the following genre assignments:

\[\begin{array}{lll} \langle m_{\mathcal O}|m_{action}\rangle=0&\quad\quad&\langle m_{\mathcal O}|m_{KR}\rangle=1 \\ \langle m_{\mathcal E}|m_{action}\rangle=1&\quad\quad&\langle m_{\mathcal E}|m_{KR}\rangle=1 \\ \langle m_{\mathcal F}|m_{action}\rangle=0&\quad\quad&\langle m_{\mathcal F}|m_{KR}\rangle=0 \\ \langle m_{\mathcal R}|m_{action}\rangle=1&\quad\quad&\langle m_{\mathcal R}|m_{KR}\rangle=0 \end{array}\]

Now assume you’re a user who is three times more interested in action movies than in Kurt Russell. That is:

\[\begin{array}{lll} \langle u_{action}|u\rangle=3&\quad\quad&\langle u_{KR}|u\rangle=1 \end{array}\]

Then we see that something like Rambo may score pretty high for this user:

\[\langle m_{\mathcal R}|W'|u\rangle = \langle m_{\mathcal R}|m_{action}\rangle\langle u_{action}|u\rangle + \langle m_{\mathcal R}|m_{KR}\rangle\langle u_{KR}|u\rangle = 1\cdot 3 + 0\cdot 1 = 3\]

And though we’ll see that the user has no interest in Frankenstein, \(\langle m_{\mathcal F}|W'|u\rangle=0\), they’ll have some mild interest in Overboard, \(\langle m_{\mathcal O}|W'|u\rangle=1\). Yet, because these features are additive, this user will have the most interest in Escape From New York, a movie that is both an action movie and stars Kurt Russell, \(\langle m_{\mathcal E}|W'|u\rangle=4\).

Collaborative filtering is also smart enough to figure out how two weaker signals can add up to outweigh a single strong one. For the sake of argument, say that Death Proof (\(\mathcal D\)) is rated lower for Kurt Russell, because he’s not the lead, \(\langle m_{\mathcal D}|m_{KR}\rangle = 0.5\), and is rated lower for action, because it’s a bit of a genre-bender, \(\langle m_{\mathcal D}|m_{action}\rangle = 0.7\). We can do the calculation to find that \(\langle m_{\mathcal D}|W'|u\rangle = 0.7\cdot 3+0.5\cdot 1 = 2.6\). So it should be ranked above Overboard but below Rambo, closer to Rambo.
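The arithmetic in this example is just a dot product per movie, which the sketch below reproduces. The genre coordinates and the user’s interests are the values assumed in the text above. The dictionary layout and variable names are illustrative choices.

```python
import numpy as np

# Genre coordinates (action, Kurt Russell) for each movie, taken from the example above.
movies = {
    "Overboard": (0.0, 1.0),
    "Escape From New York": (1.0, 1.0),
    "Frankenstein": (0.0, 0.0),
    "Rambo": (1.0, 0.0),
    "Death Proof": (0.7, 0.5),
}
user = np.array([3.0, 1.0])  # <u_action|u> = 3, <u_KR|u> = 1

# With both lambdas equal to 1, <m|W'|u> is a dot product of genre coordinates.
scores = {title: float(np.dot(coords, user)) for title, coords in movies.items()}
ranking = sorted(scores, key=scores.get, reverse=True)

for title in ranking:
    print(f"{title}: {scores[title]:.1f}")
```

The resulting order is Escape From New York, Rambo, Death Proof, Overboard, Frankenstein, which matches the scores worked out by hand.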